THR Dataset

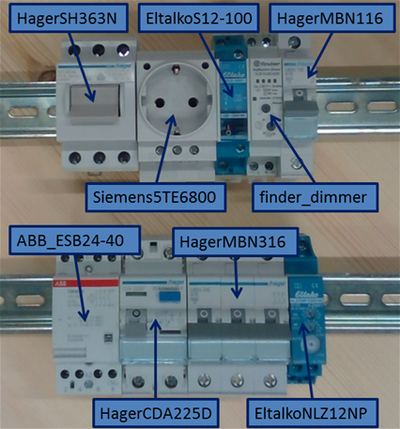

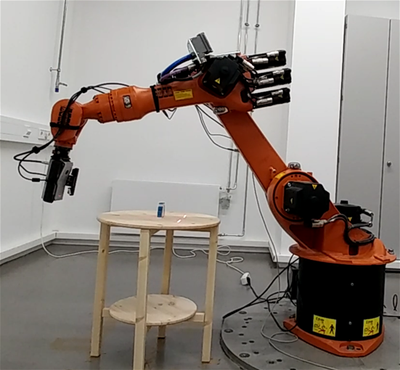

The THR (Top Hat Rail) dataset consists of RGB and depth images acquired with the Intel RealSense SR300 (sr300) and the Asus Xtion (xtion) in combination with a KUKA KR16 industrial robot. Data were recorded for nine industrial top hat rail objects and for four scenes containing different combinations of these objects (including duplicate instances). All objects can be ordered from the respective manufacturers' websites, e.g., if one wants to test the performance of object recognition and manipulation in a real setup.

The SR300 was chosen because it can capture depth images at close range, down to 200 mm. The object or scene is placed on a round table, and for each distance 200 viewpoints are evenly sampled on a half-sphere around it, using different camera orientations for additional variation. Four camera orientations (shown by the differently colored points) were used because of the robot's workspace: the sensor is rotated in 90-degree steps around the viewing ray, so that the images are upright, upside down, or rotated left or right. For the single objects, images are acquired at distances of 300 mm and 600 mm with the SR300, but only at 600 mm with the Xtion because of its working range. For the scenes, which can be used for testing, images are acquired at 450 mm with the SR300 and at 600 mm with the Xtion. Additionally, we provide high-quality ground truth models, acquired with a laser striper, of the single objects and of the objects in the scenes.
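The description above does not say how the 200 viewpoints were distributed on the half-sphere. Purely as an illustration, the sketch below assumes a Fibonacci (golden-angle) spiral, which places points at roughly equal spacing on the upper half-sphere, and assigns the four 90-degree roll angles about the viewing ray in round-robin order. The function name and both of these choices are hypothetical and are not the procedure used to record the dataset.

```python
import math


def sample_half_sphere_viewpoints(n=200, radius_mm=600.0):
    """Return n (x, y, z, roll_deg) tuples on the upper half-sphere.

    Assumptions (not from the dataset): Fibonacci spiral sampling and a
    round-robin assignment of the four roll angles about the viewing ray.
    The sphere is centered on the table, with z pointing up.
    """
    golden_angle = math.pi * (3.0 - math.sqrt(5.0))
    # Roll about the viewing ray: upright, rotated, upside down, rotated
    rolls = (0, 90, 180, 270)
    viewpoints = []
    for i in range(n):
        # Uniform steps in height z in (0, 1): from near the top towards the table plane
        z = 1.0 - (i + 0.5) / n
        r = math.sqrt(1.0 - z * z)  # radius of the horizontal circle at height z
        phi = i * golden_angle      # azimuth advances by the golden angle
        x, y = r * math.cos(phi), r * math.sin(phi)
        viewpoints.append((radius_mm * x, radius_mm * y, radius_mm * z, rolls[i % 4]))
    return viewpoints
```

Because the height z is sampled in uniform steps, each point covers roughly the same surface area of the half-sphere (by Archimedes' hat-box theorem), which is one simple way to obtain evenly spread viewpoints.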

Download

The individual files of the THR dataset can be downloaded from http://rmc.dlr.de/download/thr-dataset/

The folders containing the data for each object (e.g., ABB_ESB24-40) or scene (e.g., scene1) are described below.

For each object, 200 measurements were acquired on a half-sphere around the object at each of two distances (approx. 300 mm and 600 mm), resulting in two folders:

- dist300 (sr300)

- dist600 (sr300 and xtion)

All measurements and values are given in millimeters.

For the object scenes, which are intended for testing, the same 200 viewpoints were used, with a different radius for the SR300:

- dist450 (sr300)

- dist600 (xtion)
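The distance folders listed above can be summarized as a small lookup table. The sketch below is a hypothetical helper (the names `ACQUISITIONS` and `folders_for` are ours); it only encodes which distance folders were recorded with which sensor and does not assume anything about the exact directory nesting on the download server.

```python
# Distance folders (in mm) recorded per sensor, as listed above.
ACQUISITIONS = {
    "object": {"sr300": (300, 600), "xtion": (600,)},
    "scene": {"sr300": (450,), "xtion": (600,)},
}


def folders_for(kind, sensor):
    """Return the distN folder names for an object or scene and a sensor."""
    return [f"dist{d}" for d in ACQUISITIONS[kind][sensor]]
```

For example, `folders_for("object", "sr300")` returns `['dist300', 'dist600']`, while `folders_for("scene", "sr300")` returns `['dist450']`.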

Each object/scene dataset contains:

- depth: depth images XXX.png, stored as single-channel 16-bit (mono16) PNGs; for higher precision, the raw values must be multiplied by the depth scale factor 0.124987 to obtain millimeters

- rgb: RGB images XXX.png

- poses: pose of the robot's TCP in XXX.tcp.coords, stored as a row-major homogeneous matrix (the last row, 0 0 0 1, is not saved)

- masks: segmentation masks of the part of the object visible in the depth or RGB images (for the Xtion, a single mask is given for both, since its depth and color images are registered); for the scenes, an individual mask is also provided for each object
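A minimal sketch of turning the raw files into metric data follows. It assumes that a depth PNG has already been loaded as a 2D array of raw 16-bit values (for example with OpenCV's `cv2.imread(path, cv2.IMREAD_UNCHANGED)`, which preserves 16-bit depth) and that a `.tcp.coords` file holds the 12 numbers of the first three matrix rows separated by whitespace. Only the scale factor 0.124987 and the row-major layout come from the description above; the helper names and the exact file layout are assumptions.

```python
DEPTH_SCALE_MM = 0.124987  # raw mono16 value -> millimeters (from the dataset description)


def depth_to_mm(raw_depth):
    """Convert a 2D list of raw 16-bit depth values to millimeters.

    A raw value of 0 stays 0.0; treating it as 'no measurement' is an assumption.
    """
    return [[v * DEPTH_SCALE_MM for v in row] for row in raw_depth]


def parse_tcp_coords(text):
    """Parse the contents of a .tcp.coords file into a 4x4 homogeneous matrix.

    Assumption: 12 whitespace-separated numbers giving the top 3x4 block in
    row-major order; the omitted last row is restored as 0 0 0 1.
    """
    values = [float(tok) for tok in text.split()]
    if len(values) != 12:
        raise ValueError(f"expected 12 values, got {len(values)}")
    rows = [values[0:4], values[4:8], values[8:12]]
    rows.append([0.0, 0.0, 0.0, 1.0])
    return rows
```

With this scale factor, each raw step corresponds to roughly 0.125 mm, which is where the extra precision over whole-millimeter storage comes from.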

To obtain the pose of the sensor, the pose of the robot's TCP must be multiplied by the extrinsic (hand-eye) transformation given in the calibration file. The transformation of the depth camera is camera0 for the SR300 and camera1 for the Xtion. For the Xtion, color and depth are already registered; for the SR300, the transformation between them is given in the calibration file. The script convert2PointCloud.py shows how a point cloud can be computed from the data.
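The pose chain and back-projection described above can be sketched as follows. This is not the convert2PointCloud.py script. It assumes a pinhole model with intrinsics fx, fy, cx, cy (in pixels) taken from the calibration file, ignores lens distortion, and assumes that the hand-eye matrix maps camera coordinates into the TCP frame, so that the camera pose in the robot base frame is T_base_tcp · T_tcp_cam. The function names are hypothetical, and the conventions should be checked against the CalDe/CalLab documentation (using the camera0 entry for the SR300 depth camera and camera1 for the Xtion).

```python
def matmul4(a, b):
    """Multiply two 4x4 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def camera_pose(T_base_tcp, T_tcp_cam):
    """Camera pose in the robot base frame: TCP pose times hand-eye transform (assumed order)."""
    return matmul4(T_base_tcp, T_tcp_cam)


def depth_to_point_cloud(depth_mm, fx, fy, cx, cy, T_base_cam):
    """Back-project a depth image (in mm) into 3D points in the robot base frame.

    Pixels with depth <= 0 are skipped; lens distortion is ignored (assumption).
    """
    points = []
    for v, row in enumerate(depth_mm):        # v: image row
        for u, z in enumerate(row):           # u: image column
            if z <= 0:
                continue
            # Pinhole back-projection into the camera frame
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            p = (x, y, z, 1.0)
            # Homogeneous transform into the robot base frame (drop the w component)
            points.append(tuple(sum(T_base_cam[i][k] * p[k] for k in range(4)) for i in range(3)))
    return points
```

Keeping the pose composition separate from the back-projection makes it easy to swap in a different hand-eye convention if the calibration file turns out to store the inverse transform.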

In addition, ground truth models of each object and scene, acquired with a laser striper, are stored in the folder:

- model: the ground truth models (downsampled and high resolution), which are centered; the ground truth homogeneous transformation of the object/scene is given in the *.mat file

For the scenes, a ground truth mesh and a transformation file are given for every object in the scene.

The intrinsic and extrinsic camera calibration is given in:

- calibration: calibration file obtained with the DLR tools CalDe and CalLab; for details about the calibration tools and the calibration file format, please visit DLR CalDe and CalLab.

Using the THR-Dataset?

Please cite our paper if you use the THR dataset in your research:

@INPROCEEDINGS{Durner2017dataset, title = {Experience-based Optimization of Robotic Perception}, author = {Durner, Maximilian and Kriegel, Simon and Riedel, Sebastian and Brucker, Manuel and Marton, Zoltan-Csaba and Balint-Benczedi, Ferenc and Triebel, Rudolph}, booktitle = {IEEE International Conference on Advanced Robotics (ICAR)}, month = {July}, year = {2017}, address = {Hongkong, China} }

License

All datasets on this page are copyrighted by us and published under the Creative Commons Attribution-ShareAlike 4.0 International License. This means that you must credit the authors in the manner specified above and may distribute any resulting work only under the same license.