Bio-data exploitation for supportive robotics

To get the most benefit from robotic systems, we also aim to support people in their daily lives. In many situations, people have limited control over their environment because of medical conditions. Our objective is to let these people use robotic systems to interact with the environment on their behalf. To enable highly intuitive control, we translate bio-data recorded from these users into suitable control commands for the robots. Besides evaluating how best to process the data to make control easy, we are looking for new technologies to acquire this data.

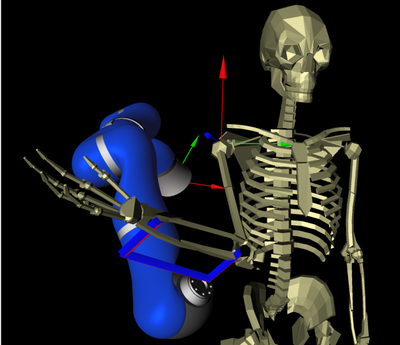

For many people with upper-limb disabilities, simple activities of daily living, such as drinking, opening a door, or pushing an elevator button, require the assistance of a caregiver. An assistive robotic system controlled via a brain-computer interface (BCI) could enable these people to perform such tasks on their own again and thereby increase their independence. We investigate various methods that give disabled people control over the DLR Light-Weight Robot while supporting task execution with the capabilities of a torque-controlled robot.

In our research, we mainly investigate the following two interfacing techniques:

- We investigate surface electromyography (sEMG) as a non-invasive control interface, e.g. for people with spinal muscular atrophy (SMA) [1]. Although people with muscular atrophy eventually lose the ability to actively move their limbs, they can still activate a small number of muscle fibers. We measure this residual muscular activity and use machine learning methods to transform these signals into continuous control commands for the robot.

- For people with more severe disabilities, e.g. after spinal cord injury or stroke, we investigate cortical interfaces, which record neural activity directly from the brain. This work is based on the BrainGate2 Neural Interface System developed at Brown University. Using this implantable BCI, we enabled a person with tetraplegia to control the DLR LWR-III ([2], [3]). In our collaborative study, a participant was able to control the robotic system and drink from a bottle on her own for the first time since suffering a brainstem stroke 15 years earlier.
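Intracortical BCIs of the kind used in [2] and [3] commonly decode a continuous end-point velocity from binned firing rates with a Kalman filter. The sketch below assumes such a linear-Gaussian decoder, fitted by least squares on calibration data. It runs on synthetic data, and the model structure, the 40 simulated units and all parameters are illustrative assumptions rather than the decoder used in these studies.

```python
import numpy as np


def fit_kalman(X, Z):
    """Fit a linear-Gaussian decoder from calibration data by least squares.

    X: velocities, shape (T, d); Z: binned firing rates, shape (T, n).
    State model:       x_t = A x_{t-1} + w,       w ~ N(0, W)
    Observation model: z_t - mu = H x_t + q,      q ~ N(0, Q)
    """
    mu = Z.mean(axis=0)
    Zc = Z - mu
    X0, X1 = X[:-1], X[1:]
    A = np.linalg.lstsq(X0, X1, rcond=None)[0].T
    W = np.atleast_2d(np.cov((X1 - X0 @ A.T).T))
    H = np.linalg.lstsq(X, Zc, rcond=None)[0].T
    Q = np.atleast_2d(np.cov((Zc - X @ H.T).T))
    return A, W, H, Q, mu


def decode(Z, A, W, H, Q, mu):
    """Run the Kalman filter over firing-rate bins; returns velocities of shape (T, d)."""
    d = A.shape[0]
    x, P = np.zeros(d), np.eye(d)
    out = []
    for z in Z:
        # Predict: propagate the previous estimate through the state model.
        x = A @ x
        P = A @ P @ A.T + W
        # Update: correct the prediction with the new neural observation.
        S = H @ P @ H.T + Q
        K = np.linalg.solve(S, H @ P).T  # K = P H^T S^-1 (S and P symmetric)
        x = x + K @ (z - mu - H @ x)
        P = (np.eye(d) - K @ H) @ P
        out.append(x)
    return np.array(out)


def simulate(T, H_true, rng, noise=1.0):
    """Synthetic stand-in data: AR(1) velocities and linearly tuned, noisy firing rates."""
    d = H_true.shape[1]
    X = np.zeros((T, d))
    for t in range(1, T):
        X[t] = 0.95 * X[t - 1] + 0.3 * rng.standard_normal(d)
    Z = 5.0 + X @ H_true.T + noise * rng.standard_normal((T, H_true.shape[0]))
    return X, Z


def demo(seed=0):
    """Calibrate on one synthetic session, decode another; return the worst per-axis correlation."""
    rng = np.random.default_rng(seed)
    H_true = rng.standard_normal((40, 2))  # 40 hypothetical units, 2-D velocity
    X_cal, Z_cal = simulate(2000, H_true, rng)
    X_test, Z_test = simulate(500, H_true, rng)
    X_hat = decode(Z_test, *fit_kalman(X_cal, Z_cal))
    return min(np.corrcoef(X_test[:, k], X_hat[:, k])[0, 1] for k in range(2))


if __name__ == "__main__":
    print(f"worst-axis correlation: {demo():.3f}")
```

In a real system the calibration data would come from a session in which the participant observes or imagines prescribed movements, and the decoded velocity would then be sent to the robot controller.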

To improve the usability of such an assistive robotic system, e.g. when grasping objects, we integrate autonomous capabilities into the robot to compensate for inaccuracies in the BCI. Different levels of shared autonomy can be incorporated, ranging from grasp stability detection, which prevents objects from being dropped unintentionally, to fully autonomous reaching and grasping based on vision data.
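A simple way to realize such shared autonomy, assumed here for illustration, is linear arbitration: the executed velocity is a weighted mix of the decoded user command and an autonomous command towards a grasp target (for example one located by a vision system), with the weight growing as the hand approaches the target. The distance-based schedule, its thresholds and the helper names `arbitration_weight`, `blend` and `autonomous_velocity` are hypothetical.

```python
import numpy as np


def arbitration_weight(distance, d_full=0.05, d_none=0.30):
    """Hypothetical schedule: full autonomy within d_full metres of the target,
    pure user control beyond d_none, linear in between."""
    if distance <= d_full:
        return 1.0
    if distance >= d_none:
        return 0.0
    return (d_none - distance) / (d_none - d_full)


def blend(u_user, u_auto, alpha):
    """Linear arbitration: alpha = 0 is pure user control, alpha = 1 pure autonomy."""
    return (1.0 - alpha) * np.asarray(u_user, dtype=float) + alpha * np.asarray(u_auto, dtype=float)


def autonomous_velocity(x, target, gain=1.0, v_max=0.2):
    """Proportional reach towards the grasp target, limited to v_max [m/s]."""
    v = gain * (np.asarray(target, dtype=float) - np.asarray(x, dtype=float))
    speed = np.linalg.norm(v)
    return v if speed <= v_max else v * (v_max / speed)


if __name__ == "__main__":
    x = np.array([0.40, 0.00, 0.20])       # current hand position [m]
    target = np.array([0.50, 0.10, 0.20])  # object position, e.g. from vision
    u_bci = np.array([0.10, 0.05, 0.02])   # noisy velocity decoded from the BCI
    alpha = arbitration_weight(np.linalg.norm(target - x))
    print(alpha, blend(u_bci, autonomous_velocity(x, target), alpha))
```

With this kind of arbitration the user stays in charge far from the object, while the robot is meant to absorb decoding noise during the final approach, where accuracy matters most.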

How can people with disabilities properly control a prosthetic, assistive or rehabilitation device? What do we mean by "properly"? Can we achieve this with low-cost, portable, wearable devices that work online? To answer these questions, we work in the field of Peripheral Human-Machine Interfaces, aiming to improve the control of prosthetic robotic devices in a non-invasive and realistic way, with the goal of helping people with disabilities regain lost hand and arm function without the burden of surgery, drugs, hospitalisation and the like [4].

We aim to re-establish the sensorimotor loop with the missing or injured limb. This involves both feed-forward control (detecting the patient's movement intent) and sensory feedback (transducing data into sensation). To this end, we study various non-invasive human-machine interfaces for feed-forward control (surface electromyography, ultrasound, tactile and optical sensing, e.g. [5], [6], [7]) and investigate innovative ways of delivering sensory feedback, such as applying force, vibration or electrical stimulation to the patient's body.
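To illustrate what transducing data into sensation can mean in practice, the sketch maps a measured grip force to a normalized vibrotactile intensity. The dead zone `f_min`, the assumed perception threshold `i_min`, the saturation force `f_max` and the function name are hypothetical values and choices that would need to be calibrated per user.

```python
def force_to_vibration(force_n, f_min=0.5, f_max=20.0, i_min=0.2, i_max=1.0):
    """Map grip force [N] to a normalized vibration intensity in [0, 1].

    Below f_min no feedback is given (avoids constant buzzing from sensor noise).
    Above it, intensity rises linearly from i_min (assumed perception threshold)
    to i_max and saturates once the force reaches f_max.
    """
    if force_n < f_min:
        return 0.0
    frac = min((force_n - f_min) / (f_max - f_min), 1.0)
    return i_min + frac * (i_max - i_min)


if __name__ == "__main__":
    for f in (0.2, 1.0, 5.0, 25.0):
        print(f, round(force_to_vibration(f), 3))
```

Starting the intensity at a perceptible level rather than at zero is meant to make the first contact with an object noticeable at all.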

We also focus on practically usable machine learning methods for this form of control [8]. Such methods should be incremental, online and fast; quick and easy for the patient to use; and able to reconstruct the subject's movement intent as accurately as possible, using regression rather than classification, and non-linear approaches where required. Our aim is simultaneous and proportional control, as opposed to sequential, discrete control.
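One family of methods that meets these requirements is ridge regression on random Fourier features, which approximates a Gaussian-kernel regressor and can be updated one sample at a time with the Sherman-Morrison formula. [5] reports non-linear incremental learning for myoelectric control; the class below is an illustrative sketch of the general idea and may differ from the published method, and its feature count, kernel width and regularization are assumptions. All output degrees of freedom are predicted at once as continuous values, i.e. simultaneously and proportionally.

```python
import numpy as np


class IncrementalRFFRidge:
    """Online ridge regression on random Fourier features (Gaussian-kernel approximation)."""

    def __init__(self, n_inputs, n_outputs, n_features=300, sigma=1.0, lam=1.0, seed=0):
        rng = np.random.default_rng(seed)
        # Random projection for k(x, y) = exp(-|x - y|^2 / (2 sigma^2)).
        self.Omega = rng.standard_normal((n_features, n_inputs)) / sigma
        self.b = rng.uniform(0.0, 2.0 * np.pi, n_features)
        self.scale = np.sqrt(2.0 / n_features)
        # P = (Phi^T Phi + lam I)^-1 is maintained incrementally; B = Phi^T Y.
        self.P = np.eye(n_features) / lam
        self.B = np.zeros((n_features, n_outputs))
        self.W = np.zeros((n_features, n_outputs))

    def features(self, x):
        return self.scale * np.cos(self.Omega @ x + self.b)

    def update(self, x, y):
        """Add one (input, target) pair using a Sherman-Morrison rank-one update of P."""
        phi = self.features(np.asarray(x, dtype=float))
        Pphi = self.P @ phi
        self.P -= np.outer(Pphi, Pphi) / (1.0 + phi @ Pphi)
        self.B += np.outer(phi, y)
        self.W = self.P @ self.B

    def predict(self, x):
        return self.features(np.asarray(x, dtype=float)) @ self.W


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    model = IncrementalRFFRidge(n_inputs=4, n_outputs=2, sigma=2.0, lam=0.1)
    # Synthetic stand-in for EMG features mapped to two simultaneously active DOFs.
    for _ in range(1000):
        x = rng.uniform(0.0, 1.0, 4)
        y = np.array([np.tanh(2 * x[0] - x[1]), x[2] * x[3]])
        model.update(x, y)  # the model is usable after every single sample
    print(model.predict(np.array([0.5, 0.2, 0.8, 0.4])))
```

Each update only touches a fixed-size matrix, so the cost per sample does not grow with the amount of data already seen, which is what makes retraining during use feasible.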

Our main target patients are those affected by amputation ([9], [10]), neuropathic pain, stroke, spinal cord injury and neuromuscular degenerative conditions.

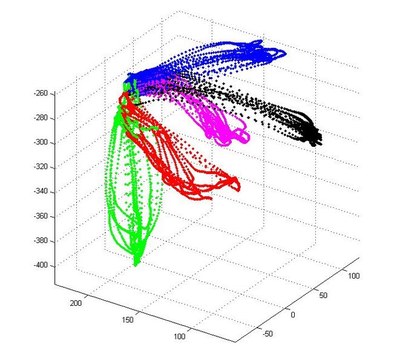

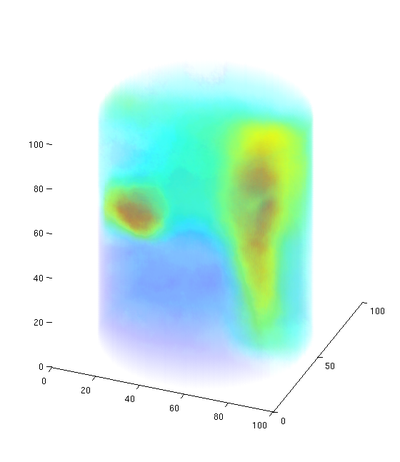

Beyond exploiting bio-data, we are also working on improving methods for acquiring it. Currently, surface EMG offers only a very coarse view of muscle activity, as it merely records the summation of signals from many muscle fibers. We are developing a method for the 3D reconstruction of the generating potentials [11] to determine which muscles are active during a specific motion. By exploiting the crosstalk between electrodes in large electrode arrays, we aim to recover the potentials of "muscles beneath muscles" and thereby gain a better understanding of action potentials in deeper muscle regions, without requiring invasive technology such as needle EMG. We call this method imaging electromyography, or iEMG for short.
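The reconstruction method of [11] is not reproduced here. As a generic illustration of the underlying inverse problem, the sketch assumes a linear volume-conductor model m = L s, where m holds the potentials measured by the electrode array, s the amplitudes of candidate sources at different depths, and L a lead-field matrix, and computes a Tikhonov-regularized (minimum-norm) estimate of s. The 1/distance lead field, the electrode layout and the regularization weight are toy assumptions, not a physiological tissue model.

```python
import numpy as np


def lead_field(electrode_x, source_pos):
    """Toy lead field: potential at electrode i from unit source j decays as 1/distance.

    electrode_x: electrode positions along the skin surface (depth 0), shape (n_el,)
    source_pos:  (x, depth) of candidate sources, shape (n_src, 2)
    """
    dx = electrode_x[:, None] - source_pos[None, :, 0]
    dz = source_pos[None, :, 1]
    return 1.0 / np.sqrt(dx ** 2 + dz ** 2)


def tikhonov_inverse(L, m, lam):
    """Minimum-norm estimate s = L^T (L L^T + lam I)^-1 m (fewer electrodes than sources)."""
    n = L.shape[0]
    return L.T @ np.linalg.solve(L @ L.T + lam * np.eye(n), m)


if __name__ == "__main__":
    electrodes = np.linspace(-0.05, 0.05, 32)  # 32 electrodes over 10 cm (hypothetical)
    xs = np.linspace(-0.04, 0.04, 9)
    # Candidate sources in a shallow (5 mm) and a deep (20 mm) layer.
    sources = np.array([(x, d) for d in (0.005, 0.02) for x in xs])
    L = lead_field(electrodes, sources)
    s_true = np.zeros(len(sources))
    s_true[13] = 1.0  # one active source in the deep layer
    m = L @ s_true + 1e-3 * np.random.default_rng(0).standard_normal(len(electrodes))
    s_hat = tikhonov_inverse(L, m, lam=1e-2)
    print(s_hat.reshape(2, 9).round(2))  # row 0: shallow layer, row 1: deep layer
```

Plain minimum-norm estimates are known to favour superficial sources, so practical source-imaging approaches usually add depth weighting or other prior knowledge.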

Each year, approximately 15 million people worldwide suffer a stroke for the first time. The neurological injury often leads to persistent paresis or paralysis. Regaining control over their own limbs, and thus remaining independent of other people, is only possible through frequent and repetitive training.

Our goal is to provide self-adjusting robots for physiotherapy of the upper limb. Through impedance control, we can adjust how far the patient may deviate from the target path of motion. This enables “repetition without repetition” (Bernstein 1967), which fosters neural plasticity and thus learning. By applying different force patterns, e.g. assisting or resisting forces, we can adapt the training to the individual needs of the patient. Possible adjustments range from the type of training exercise to the degree of robotic support.
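The sketch below shows one way an impedance law can allow a band of deviation around the target path: inside a tube of radius `r_tol` no corrective force acts, while outside it a spring-damper pulls the hand back towards the tube. An additional force along the path assists (`f_along > 0`) or resists (`f_along < 0`) the movement. The tube formulation, the gains and the function name `therapy_force` are illustrative assumptions, not our controller.

```python
import numpy as np


def therapy_force(x, v, x_path, t_path, r_tol=0.02, k=300.0, d=20.0, f_along=0.0):
    """Cartesian force [N] commanded to the patient's hand.

    x, v:    hand position [m] and velocity [m/s]
    x_path:  closest point on the target path; t_path: unit tangent of the path there
    r_tol:   allowed deviation (tube radius) before the spring engages
    k, d:    stiffness [N/m] and damping [Ns/m] of the corrective impedance
    f_along: force along the path; > 0 assists, < 0 resists the motion
    """
    x, v, x_path, t_path = (np.asarray(a, dtype=float) for a in (x, v, x_path, t_path))
    err = x - x_path
    err_perp = err - (err @ t_path) * t_path  # deviation orthogonal to the path
    dist = np.linalg.norm(err_perp)
    force = f_along * t_path
    if dist > r_tol:
        n = err_perp / dist
        # The spring acts only on the part of the deviation exceeding the tolerance;
        # damping acts only on the velocity component along the deviation direction.
        force = force - k * (dist - r_tol) * n - d * (v @ n) * n
    return force


if __name__ == "__main__":
    # Hand 5 cm above a straight path along x, moving along the path; mild assistance.
    print(therapy_force([0.1, 0.05], [0.1, 0.0], [0.1, 0.0], [1.0, 0.0], f_along=2.0))
```

Widening `r_tol` lets successive repetitions differ more from one another, while `k`, `d` and `f_along` set how strongly the robot guides the patient.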

By monitoring EMG signals from the impaired arm, we can detect an intended motion before the patient has regained enough strength to execute it. This lets us ensure that the patient participates actively in the training and quantitatively analyze their progress. Moreover, by analyzing the EMG, we aim to identify the regions of the workspace that need more training and automatically adjust the exercises accordingly, e.g. by focusing on those regions.
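A common approach to EMG-based intent detection, assumed here, first smooths the raw signal into a moving-RMS envelope and then flags onset once the envelope stays above a resting baseline (mean plus `h` standard deviations) for a minimum number of samples, which rejects short spikes. The window length, `h`, the hold time and the 1 kHz sampling rate in the demo are hypothetical and would be tuned per patient.

```python
import numpy as np


def rms_envelope(emg, window):
    """Moving RMS of one EMG channel; output length is len(emg) - window + 1."""
    kernel = np.ones(window) / window
    return np.sqrt(np.convolve(np.asarray(emg, dtype=float) ** 2, kernel, mode="valid"))


def detect_onset(envelope, baseline, h=3.0, min_samples=50):
    """Return the index of the first sustained activation, or None.

    envelope:    smoothed EMG of one channel during the trial
    baseline:    the same signal recorded while the patient rests
    h:           threshold in baseline standard deviations
    min_samples: how long the envelope must stay above threshold
    """
    threshold = np.mean(baseline) + h * np.std(baseline)
    run = 0
    for i, flag in enumerate(np.asarray(envelope) > threshold):
        run = run + 1 if flag else 0
        if run >= min_samples:
            return i - min_samples + 1
    return None


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    fs, window = 1000, 100  # assumed sampling rate [Hz] and RMS window [samples]
    rest = 0.02 * rng.standard_normal(2 * fs)        # 2 s of resting EMG
    trial = 0.02 * rng.standard_normal(3 * fs)
    trial[1500:] += 0.1 * rng.standard_normal(1500)  # weak activation from t = 1.5 s
    onset = detect_onset(rms_envelope(trial, window), rms_envelope(rest, window),
                         min_samples=100)
    if onset is not None:
        # Envelope sample i summarizes raw samples i .. i + window - 1.
        print(f"intent detected, first window ends at t = {(onset + window - 1) / fs:.2f} s")
```

Counting detected onsets per exercise and per workspace region would give one simple quantitative measure of active participation.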

Furthermore, by observing the non-impaired arm, the robot will be able to help the impaired limb copy the motion of the other arm, facilitating bilateral movement. This promotes cortical reorganization and gives patients more control over their training, allowing them to take their own needs into account.
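For mirror-symmetric bilateral exercises, a target for the impaired arm can be derived by reflecting the tracked pose of the non-impaired hand across the patient's sagittal plane. The sketch assumes a body-fixed frame whose y-axis points to the patient's left, with the sagittal plane at y = 0; this convention and the name `mirror_pose` are hypothetical. Conjugating the rotation with the reflection, R' = S R S, keeps a proper (right-handed) rotation, which corresponds to flipping the local y-axis of the hand frame.

```python
import numpy as np

# Reflection across the sagittal (x-z) plane, i.e. y -> -y in the assumed body frame.
S = np.diag([1.0, -1.0, 1.0])


def mirror_pose(p, R):
    """Mirror the healthy hand's pose (position p, rotation matrix R)
    to obtain a target pose for the impaired hand."""
    p_m = S @ np.asarray(p, dtype=float)
    R_m = S @ np.asarray(R, dtype=float) @ S  # conjugation keeps det(R_m) = +1
    return p_m, R_m


if __name__ == "__main__":
    theta = 0.3
    Rz = np.array([[np.cos(theta), -np.sin(theta), 0.0],
                   [np.sin(theta), np.cos(theta), 0.0],
                   [0.0, 0.0, 1.0]])
    p_target, R_target = mirror_pose([0.35, 0.25, 0.10], Rz)  # healthy (left) hand pose
    print(p_target)  # y changes sign: the target lies on the impaired (right) side
```

The mirrored pose could then serve as the reference for an impedance-controlled assistive motion of the impaired arm.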

[1] Vogel, J., Bayer, J. and van der Smagt, P. (2013) Continuous robot control using surface electromyography of atrophic muscles. In: IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Tokyo, Japan, 3-7 November 2013, pp. 845-850. IEEE.

[2] Vogel, J., Haddadin, S., Simeral, J. D., Stavisky, S. D., Bacher, D., Hochberg, L. R., Donoghue, J. P. and van der Smagt, P. (2010) Continuous control of the DLR Light-weight Robot III by a human with tetraplegia using the BrainGate2 Neural Interface System. In: 12th International Symposium on Experimental Robotics (ISER), Montreal. Springer-Verlag Berlin Heidelberg.

[3] Hochberg, L. R., Bacher, D., Jarosiewicz, B., Masse, N. Y., Simeral, J. D., Vogel, J., Haddadin, S., Liu, J., Cash, S. S., van der Smagt, P. and Donoghue, J. P. (2012) Reach and grasp by people with tetraplegia using a neurally controlled robotic arm. Nature, 485 (7398), pp. 372-375. Nature Publishing Group. DOI: 10.1038/nature11076.

[4] Castellini, C. and van der Smagt, P. (2008) Surface EMG in advanced hand prosthetics. Biological Cybernetics, pp. 35-47. Springer Berlin / Heidelberg.

[5] Gijsberts, A., Bohra, R., González, D. S., Werner, A., Nowak, M., Caputo, B., Roa, M. and Castellini, C. (2014) Stable myoelectric control of a hand prosthesis using non-linear incremental learning. Frontiers in Neurorobotics, 8.

[6] González, D. S. and Castellini, C. (2013) A realistic implementation of ultrasound imaging as a human-machine interface for upper-limb amputees. Frontiers in Neurorobotics, 7.

[7] Castellini, C. and Kõiva, R. (2013) Using a high spatial resolution tactile sensor for intention detection. In: Proceedings of ICORR - International Conference on Rehabilitation Robotics, pp. 1-7.

[8] Castellini, C., Fiorilla, A. E. and Sandini, G. (2009) Multi-subject / daily-life activity EMG-based control of mechanical hands. Journal of Neuroengineering and Rehabilitation, 6.

[9] Castellini, C., Gruppioni, E., Davalli, A. and Sandini, G. (2009) Fine detection of grasp force and posture by amputees via surface electromyography. Journal of Physiology (Paris), 103, pp. 255-262.

[10] Castellini, C. and van der Smagt, P. (2013) Evidence of muscle synergies during human grasping. Biological Cybernetics, 107 (2), pp. 233-245. Springer. DOI: 10.1007/s00422-013-0548-4. ISSN 0340-1200.

[11] Urbanek, H. (2012) Verfahren zur rechnergestützten Verarbeitung von mit einer Mehrzahl von Elektroden gemessenen Aktionspotentialen des menschlichen oder tierischen Körpers [Method for the computer-aided processing of action potentials of the human or animal body measured with a plurality of electrodes]. Patent DE102012211799A1.