Autonomous Vision-based Navigation of the Nanokhod Rover (2004)

Autonomous planetary exploration will play an important role in future space missions. Since the success of the Mars Pathfinder mission, much work has begun on overcoming typical limitations of mobile vehicles, such as the lack of local autonomy in the Sojourner mission. Because of the very long time delay on the data link between the ground and space segments (approx. 20 min transit time) and the limited communication bandwidth typical in space, on-line control is not feasible. Thus, the Rover motion commands have to be formulated at a very abstract level. In our approach, a list of way-points, determined by a path planner on ground, is uploaded to the space segment for autonomous execution on site. Whereas most effort so far has gone into path planning, the need for an on-line navigation technique based on mission-specific components has not been discussed in depth. To fill this gap, a mission scenario has been designed for this study which focuses on the autonomous motion control of a small Rover vehicle. The essential elements for achieving this autonomy are the precise localization of the Rover without help from ground and the capability to cope with non-nominal situations independently. Because parameters such as soil characteristics are unknown, a dead-reckoning approach based, for example, only on odometry data will fail. To track the Rover on its way, a more robust 3D-localization technique is necessary. In this feasibility study, we propose a vision-based approach in which a stationary Lander module localizes and guides the Rover. Cyclical monitoring of the Rover pose and appropriate interaction between Rover and Lander form the basis for reaching the desired target position autonomously.
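The argument against pure dead reckoning can be illustrated with a small simulation. The following is only a sketch under stated assumptions, not the controller used in the study: the function names, the constant `slip` factor standing in for unknown soil characteristics, and the noise-free pose fix standing in for the Lander's vision-based measurement are all hypothetical.

```python
import math

def steer_to_waypoint(pose, waypoint, max_step=0.05):
    """Return (turn, advance): face the way-point, move at most max_step."""
    x, y, heading = pose
    dx, dy = waypoint[0] - x, waypoint[1] - y
    distance = math.hypot(dx, dy)
    bearing = math.atan2(dy, dx)
    # Wrap the heading error into [-pi, pi).
    turn = (bearing - heading + math.pi) % (2 * math.pi) - math.pi
    return turn, min(distance, max_step)

def apply_motion(pose, turn, advance, slip=0.0):
    """Turn in place, then advance; `slip` is the fraction of travel lost."""
    x, y, heading = pose
    heading += turn
    travelled = advance * (1.0 - slip)
    return (x + travelled * math.cos(heading),
            y + travelled * math.sin(heading),
            heading)

def follow_waypoints(start_pose, waypoints, slip, use_lander_fix,
                     tolerance=0.02, max_cycles=2000):
    """Drive through the way-points and return the TRUE final pose."""
    true_pose = start_pose        # where the Rover really is
    believed_pose = start_pose    # where the controller thinks it is
    for waypoint in waypoints:
        for _ in range(max_cycles):
            error = math.hypot(waypoint[0] - believed_pose[0],
                               waypoint[1] - believed_pose[1])
            if error <= tolerance:
                break
            turn, advance = steer_to_waypoint(believed_pose, waypoint)
            true_pose = apply_motion(true_pose, turn, advance, slip)
            if use_lander_fix:
                # Assumption: idealised, noise-free external pose measurement.
                believed_pose = true_pose
            else:
                # Dead reckoning: integrate the commanded motion, ignoring slip.
                believed_pose = apply_motion(believed_pose, turn, advance)
    return true_pose

waypoints = [(1.0, 0.0), (1.0, 1.0)]
start = (0.0, 0.0, 0.0)
with_fix = follow_waypoints(start, waypoints, slip=0.3, use_lander_fix=True)
odometry_only = follow_waypoints(start, waypoints, slip=0.3, use_lander_fix=False)
print("final position with cyclic pose fix:", with_fix[:2])
print("final position with odometry only: ", odometry_only[:2])
```

With the cyclic pose fix, the loop keeps commanding motion until the measured pose is within tolerance of each way-point. With odometry only, the controller stops once its own estimate says it has arrived, so the unmodelled slip remains as an uncorrected position error.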

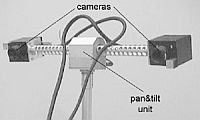

The PSPE (Payload Support for Planetary Exploration) ground control system provides an easy-to-use command interface, optimized for intuitive support of scientific experiments without requiring specific knowledge in the field of robotics. It is based on a terrain model reconstructed on ground from the stereoscopic panorama images taken by the imaging head during landing site exploration. A mission specialist selects interesting sites or objects in the terrain model to be explored by the Nanokhod Rover, the so-called ‘long arm’ of the Lander system. A path planning algorithm determines the optimal path with careful attention to given constraints (e.g. topography, estimated soil characteristics and known Nanokhod Rover characteristics).
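The text does not say which planning algorithm PSPE uses. As a rough illustration of constrained planning on a terrain model, the sketch below runs Dijkstra's algorithm over a small height grid. The grid representation, the `max_step_height` limit (a stand-in for a Rover climbing constraint) and the `slope_weight` penalty are assumptions made for this example only.

```python
import heapq

def plan_path(heights, start, goal, max_step_height=0.1, slope_weight=5.0):
    """Cheapest 4-connected path over a height grid, or None if unreachable.

    Moving to a neighbour costs 1 plus a penalty proportional to the height
    difference; steps higher than max_step_height are forbidden.
    """
    rows, cols = len(heights), len(heights[0])
    best_cost = {start: 0.0}
    previous = {}
    frontier = [(0.0, start)]
    while frontier:
        cost, cell = heapq.heappop(frontier)
        if cell == goal:
            break
        if cost > best_cost[cell]:
            continue  # stale queue entry
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if not (0 <= nr < rows and 0 <= nc < cols):
                continue
            climb = abs(heights[nr][nc] - heights[r][c])
            if climb > max_step_height:
                continue  # constraint: too steep for the Rover
            new_cost = cost + 1.0 + slope_weight * climb
            if new_cost < best_cost.get((nr, nc), float("inf")):
                best_cost[(nr, nc)] = new_cost
                previous[(nr, nc)] = cell
                heapq.heappush(frontier, (new_cost, (nr, nc)))
    if goal not in best_cost:
        return None
    # Walk the predecessor links back from the goal to recover the way-points.
    path = [goal]
    while path[-1] != start:
        path.append(previous[path[-1]])
    path.reverse()
    return path

# Hypothetical terrain: a ridge (height 1) in the middle column, open at the bottom.
terrain = [
    [0.0, 1.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 0.0],
]
print(plan_path(terrain, start=(0, 0), goal=(0, 2)))
```

The returned list of grid cells plays the role of the way-point list that is uploaded for execution. A real planner would work on the reconstructed terrain model and include soil estimates and vehicle characteristics in the cost.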

Publications

Bernhard-Michael Steinmetz, Klaus Arbter, Bernhard Brunner, Klaus Landzettel: Autonomous Vision-based Navigation of the Nanokhod Rover, Proc. i-SAIRAS 2001, Montreal, June 18-22 2001

K. Landzettel, B.-M. Steinmetz, B. Brunner, K. Arbter, M. Pollefeys, M. Vergauven, R. Moreas, F. Xu, L. Steinecke and B. Fontaine: A micro-rover navigation and control system for autonomous planetary exploration. Advanced Robotics, Vol. 18, No. 3, pp. 285-314 (2004)