Interactive Skill Learning

ISL is a research group in the department of cognitive robotics.

Many robots are general-purpose systems, designed to perform a wide variety of tasks. In practice, however, they can only do so if they also have a wide variety of skills for solving those tasks. The aim of the "Interactive Skill Learning" group is to develop methods that enable robots to acquire new skills quickly and effectively through programming by demonstration, reinforcement learning, and intuitive programming.

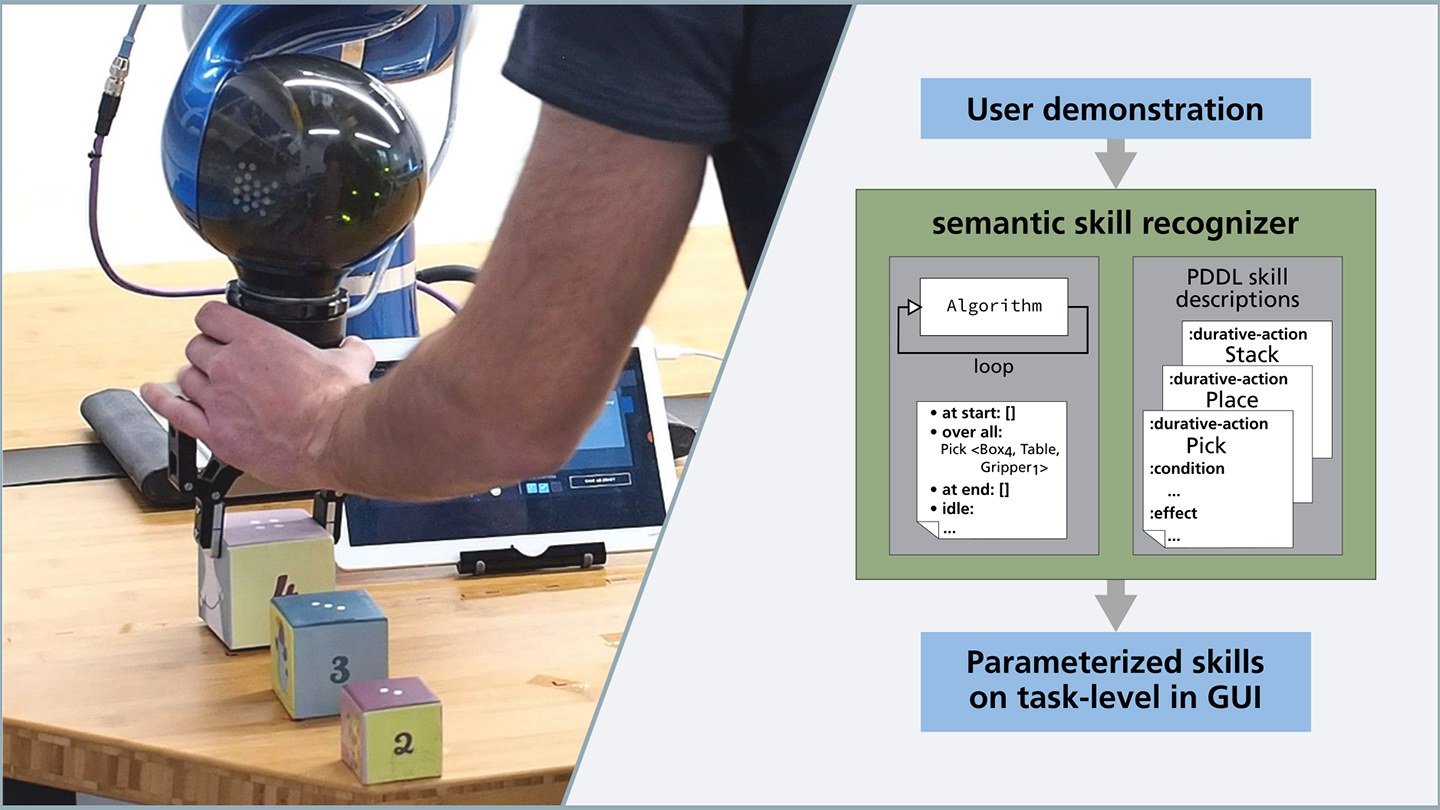

To make skill acquisition quick and effective, we believe that robots must be able to acquire their skills through a combination of prior knowledge and data-driven methods. Therefore, we aim to enable humans with different levels of robotics expertise to transfer their knowledge and experience about skills and tasks to the robot. For instance, we develop methods for robotics experts to specify their knowledge about task constraints as virtual fixtures, geometric primitives, and manifolds. An everyday example of such a constraint is "hold a cup upright if it is not empty". For users without expertise in robotics, we investigate methods that allow such constraints to be extracted from demonstrations or to be specified via an intuitive graphical interface. Compared with statistical representations alone, such explicit constraints generalize well to novel tasks or even to different robots.
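
As an illustration of what an explicit task constraint can look like in code, the following is a minimal sketch of the "hold a cup upright if it is not empty" example. It is not the group's implementation: the class name `UprightConstraint`, the 10 degree tilt tolerance, and the representation of the cup by the direction of its opening axis in the world frame are all assumptions made for this example.

```python
import math
from dataclasses import dataclass


@dataclass
class UprightConstraint:
    """Hypothetical constraint: a non-empty cup must stay close to upright."""

    # Assumed tolerance; a real system would choose this per task.
    max_tilt_rad: float = math.radians(10.0)

    def tilt(self, cup_axis):
        """Angle in radians between the cup's opening axis and world 'up' (0, 0, 1)."""
        x, y, z = cup_axis
        norm = math.sqrt(x * x + y * y + z * z)
        if norm == 0.0:
            raise ValueError("cup_axis must be a non-zero vector")
        # Clamp to guard against rounding slightly outside [-1, 1].
        cos_angle = max(-1.0, min(1.0, z / norm))
        return math.acos(cos_angle)

    def is_satisfied(self, cup_axis, fill_level):
        """The constraint only applies while the cup contains something."""
        if fill_level <= 0.0:
            return True
        return self.tilt(cup_axis) <= self.max_tilt_rad


constraint = UprightConstraint()
full_and_upright = constraint.is_satisfied((0.0, 0.0, 1.0), fill_level=0.5)
full_and_sideways = constraint.is_satisfied((1.0, 0.0, 0.0), fill_level=0.5)
empty_and_sideways = constraint.is_satisfied((1.0, 0.0, 0.0), fill_level=0.0)
```

Because the constraint is stated in terms of the cup and the world frame rather than any particular robot's joints, the same check could in principle be reused on a different robot, which is the kind of generalization the paragraph above refers to.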

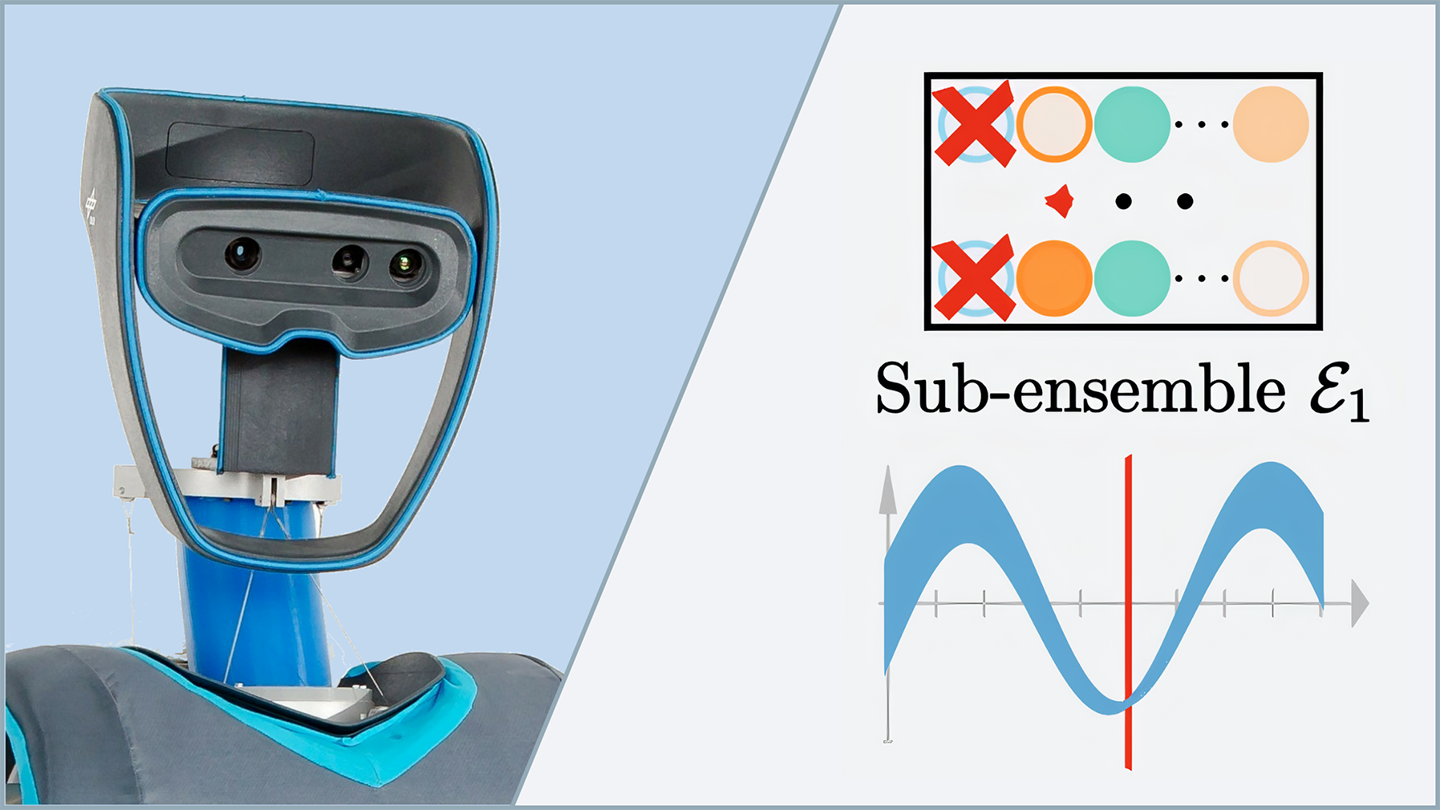

Once robots have acquired skills for a particular task, they should improve these skills over time in terms of speed, energy efficiency, and robustness. To do so, we use black-box optimization and reinforcement learning. An important pillar of our research is that learning and exploration are purposeful, i.e., the search space is explored efficiently with the help of prior knowledge, again specified by humans. As humans have a lot of commonsense prior knowledge about how to solve tasks, we do not subscribe to learning from scratch on robots. What is known need not be learned.
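
The idea of starting from human prior knowledge instead of learning from scratch can be sketched with a very simple black-box optimizer. The example below uses greedy random search (keep a perturbed candidate only if it lowers the cost), which is a stand-in chosen for brevity and not necessarily the method used by the group. The function names `improve_skill` and `toy_cost`, the two skill parameters, and all numeric values are hypothetical.

```python
import random


def improve_skill(cost, initial_params, step_sizes, iterations=200, seed=0):
    """Greedy random search that starts from human-provided parameters.

    cost:           black-box function mapping a parameter list to a scalar cost
    initial_params: prior knowledge, e.g. parameters taken from a demonstration
    step_sizes:     exploration magnitude per parameter (also prior knowledge)
    """
    rng = random.Random(seed)
    best = list(initial_params)
    best_cost = cost(best)
    for _ in range(iterations):
        # Explore only in the neighbourhood of the current best solution.
        candidate = [p + rng.gauss(0.0, s) for p, s in zip(best, step_sizes)]
        candidate_cost = cost(candidate)
        if candidate_cost < best_cost:
            best, best_cost = candidate, candidate_cost
    return best, best_cost


def toy_cost(params):
    """Hypothetical cost: penalize deviation from an unknown optimum."""
    duration, gain = params
    return (duration - 1.2) ** 2 + (gain - 0.8) ** 2


# The prior (for example from a demonstration) is already near a good solution,
# so the search only needs to refine it.
prior = [2.0, 0.5]
best, best_cost = improve_skill(toy_cost, prior, step_sizes=[0.2, 0.1])
```

Because a candidate is accepted only when it reduces the cost, the returned solution is never worse than the prior. The step sizes are a second place where human knowledge enters: they limit exploration to a region the human considers plausible.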

Humans are particularly good at understanding how to break down a large task into smaller subtasks. To transfer this knowledge, we provide intuitive graphical tools for skill and task programming. These tools are used to initialize skills, define tasks, and monitor the system during execution. The challenge here is to make the system transparent, find a consistent mental model, increase self-descriptiveness, and reduce the workload. Different interaction modalities are to be integrated in a goal-oriented way and according to user preferences. A dynamically adaptable degree of autonomy should increase user acceptance. This is supported by user intention recognition and continuous system feedback.