Robotic Work Cell Exploration

The Problem

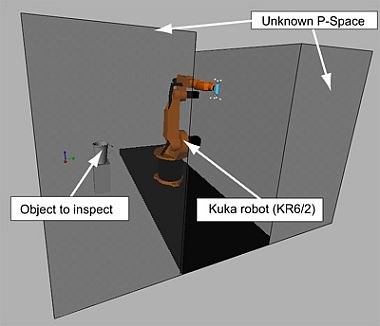

The Multisensory 3D-Modeller, developed at the Institute of Robotics and Mechatronics, acquires 3-D information about the environment. The device consists of a passive manipulator with seven degrees of freedom (7-DoF) that carries four types of sensors: a light-stripe sensor, a laser-range scanner, a passive stereo vision sensor, and a texture sensor. Experience gained in developing this passive eye-in-hand system led to the idea of automating inspection tasks. The outcome will be a robotic system capable of automated inspection, such as complete surface acquisition in 3-D environments. Because the robot moves in environments that are unknown a priori, surface acquisition requires the system to acquire knowledge of the manoeuvrable space, i.e. good knowledge of the robot's configuration space (C-space), especially around the object to be inspected. The idea of integrating realistic, noisy sensor models into the view planning stage arose in this context. Information about sensor noise can be used to merge multiple readings, to avoid contradictory data sets, and to suggest the next sensing action for the robot.

Methods

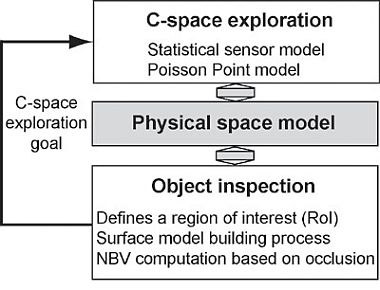

Recent work at the Robotics Lab, Simon Fraser University, Canada, has focussed on sensor-based motion planning for robots with non-trivial geometry and kinematics. The problem is to plan the next best view (NBV), also called view planning, so that the robot's C-space is explored efficiently. The notion of C-space entropy was developed as a measure of ignorance of the C-space. The NBV is then chosen as the view that maximally reduces the expected C-space entropy.
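The entropy-based selection of the next best view can be illustrated with a minimal sketch. It makes strong simplifying assumptions that are not taken from the cited work: each sampled configuration is treated as an independent binary variable (free or in collision), and an ideal sensing action is assumed to resolve every configuration it covers. The function names, the configuration labels `q0` to `q3`, and the candidate views `A`, `B`, `C` are hypothetical.

```python
import math

def binary_entropy(p):
    """Entropy in bits of a binary variable with P(free) = p."""
    if p <= 0.0 or p >= 1.0:
        return 0.0  # status is known with certainty
    return -(p * math.log2(p) + (1.0 - p) * math.log2(1.0 - p))

def cspace_entropy(p_free):
    """Total ignorance over all sampled configurations (independence assumed)."""
    return sum(binary_entropy(p) for p in p_free.values())

def expected_entropy_reduction(p_free, resolved):
    """Entropy removed if an ideal view resolves the given configurations."""
    return sum(binary_entropy(p_free[q]) for q in resolved)

def next_best_view(p_free, views):
    """Pick the candidate view with the largest expected entropy reduction."""
    return max(views, key=lambda v: expected_entropy_reduction(p_free, views[v]))

# Hypothetical belief: probability that each configuration is collision-free.
p_free = {"q0": 0.5, "q1": 0.5, "q2": 0.9, "q3": 1.0}
# Hypothetical candidate views and the configurations each would resolve.
views = {"A": ["q0", "q3"], "B": ["q0", "q1"], "C": ["q2"]}

best = next_best_view(p_free, views)
print(best, round(cspace_entropy(p_free), 3))
```

In this toy setup view `B` is selected, because it resolves the two configurations about which the least is known. A faithful implementation would derive the probabilities from a model of obstacles in physical space rather than assume them.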

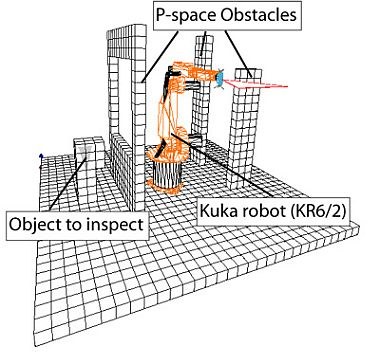

The research focuses on sensor-based approaches to robotic exploration of partly unknown environments. The aim is to facilitate automated work processes in flexible work cells. Efficient and reliable task-dependent exploration is achieved by integrating flexible sensor systems, probabilistic environment representations, information fusion, and view and motion planning designed to acquire a maximum amount of knowledge from a minimal number of viewpoints. Safety for motion planning is achieved by multisensory data acquisition. The proposed methods and sensors are evaluated in 3-D simulations. Experiments on the exploration of work space, of regions of interest in physical space, and on combined missions were performed successfully. Because the methods consider environment uncertainty in the planning process, they enable flexible, information-gain-driven missions such as view planning for object recognition or grasp planning.

However, the above work assumed an ideal sensor model, whereas real sensors are subject to noise. Results from this ideal sensing process must therefore be used with caution, e.g. by adding a safety margin to obstacles. If the sensor noise is modelled already in the planning stage, the results become more accurate and reliable. This enables the robot to move much closer to obstacles and thus achieve high sensing accuracy, a must for surface inspection tasks. Additionally, when multiple sensors are used for exploration (multisensory exploration), the same stochastic sensor noise models can be used not only for merging multiple readings but also for view planning and for exploration decisions such as where to scan and which sensor to choose, thus providing one integrated framework.
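A minimal sketch of how one noisy sensor model could serve both purposes, merging readings and choosing a sensor, is given here for a single cell of the environment. It assumes a simple binary detection model with a hit probability and a false-alarm probability per sensor; the numeric noise values and the function names are hypothetical and are not taken from the project.

```python
import math

def entropy(p):
    """Entropy in bits of a binary occupancy belief p = P(occupied)."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -(p * math.log2(p) + (1.0 - p) * math.log2(1.0 - p))

def bayes_update(prior, reads_occupied, p_hit, p_false):
    """Fuse one noisy reading into the belief using Bayes' rule.

    p_hit   = P(reading says occupied | cell occupied)
    p_false = P(reading says occupied | cell free)
    Both are assumed to lie strictly between 0 and 1.
    """
    if reads_occupied:
        like_occ, like_free = p_hit, p_false
    else:
        like_occ, like_free = 1.0 - p_hit, 1.0 - p_false
    numerator = like_occ * prior
    return numerator / (numerator + like_free * (1.0 - prior))

def expected_posterior_entropy(prior, p_hit, p_false):
    """Average remaining uncertainty after one reading from this sensor."""
    p_read_occ = p_hit * prior + p_false * (1.0 - prior)
    h_occ = entropy(bayes_update(prior, True, p_hit, p_false))
    h_free = entropy(bayes_update(prior, False, p_hit, p_false))
    return p_read_occ * h_occ + (1.0 - p_read_occ) * h_free

# Hypothetical noise parameters (p_hit, p_false) for two sensor types.
sensors = {"laser": (0.95, 0.05), "stereo": (0.80, 0.20)}

# Merging: two consecutive "occupied" readings sharpen the belief.
belief = 0.5
for _ in range(2):
    belief = bayes_update(belief, True, 0.9, 0.1)

# Planning: choose the sensor expected to leave the least uncertainty.
best_sensor = min(sensors, key=lambda s: expected_posterior_entropy(0.5, *sensors[s]))
print(round(belief, 4), best_sensor)
```

The same likelihoods appear in the fusion step and in the expected-entropy computation, which is the sense in which a single stochastic model can support an integrated framework. Real sensor models would typically depend on range, viewing angle, and surface properties.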

Results

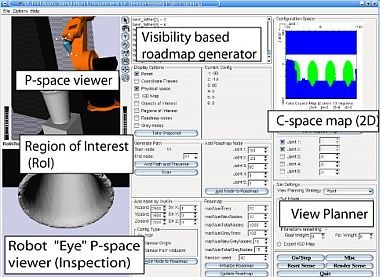

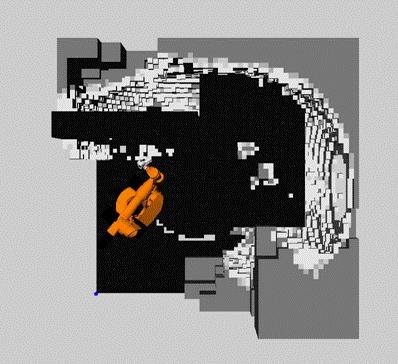

Simulations with a two-link robot showed promising results; a simulation environment for n-DoF robots is currently being developed. To reduce memory consumption, a visibility-based roadmap is implemented. The robot used is a 6-DoF Kuka KR6 carrying the new release of the Multisensory 3D-Modeller, which will be suitable for both robot guidance and manual guidance. Multiple view planning approaches already show good exploration results in 3-D; the figures show an example scene and the corresponding exploration results.
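Why a visibility-based roadmap saves memory can be seen in a small 2-D sketch. It assumes a construction in the spirit of visibility-based probabilistic roadmaps, where a sample is stored only if it is a guard (not visible from any existing guard) or a connector (visible from guards in at least two separate components). Whether the project uses exactly this scheme is an assumption; the point robot, the circular obstacle, and all names are hypothetical.

```python
import random

def segment_free(a, b, obstacles, steps=50):
    """Approximate visibility test: sample points along the segment a-b."""
    for i in range(steps + 1):
        t = i / steps
        x = a[0] + t * (b[0] - a[0])
        y = a[1] + t * (b[1] - a[1])
        if any((x - cx) ** 2 + (y - cy) ** 2 <= r * r for cx, cy, r in obstacles):
            return False
    return True

def visibility_roadmap(obstacles, n_samples=500, seed=0):
    """Build a sparse roadmap in the unit square from random samples."""
    rng = random.Random(seed)
    nodes, comp, guards, edges = [], [], [], []
    for _ in range(n_samples):
        q = (rng.random(), rng.random())
        if not segment_free(q, q, obstacles):
            continue  # sample lies inside an obstacle
        seen = [g for g in guards if segment_free(q, nodes[g], obstacles)]
        seen_comps = {comp[g] for g in seen}
        if not seen:
            # Guard: covers a region no existing guard can see.
            nodes.append(q)
            comp.append(len(nodes) - 1)
            guards.append(len(nodes) - 1)
        elif len(seen_comps) >= 2:
            # Connector: joins previously separate components.
            idx = len(nodes)
            nodes.append(q)
            target = min(seen_comps)
            comp.append(target)
            linked = set()
            for g in seen:
                if comp[g] not in linked:  # one edge per component
                    linked.add(comp[g])
                    edges.append((idx, g))
            comp[:] = [target if c in seen_comps else c for c in comp]
        # Otherwise the sample adds no coverage or connectivity: discard it.
    return nodes, edges, guards

# Hypothetical scene: one circular obstacle (centre x, centre y, radius).
obstacles = [(0.5, 0.5, 0.2)]
nodes, edges, guards = visibility_roadmap(obstacles)
print(len(nodes), "nodes kept out of 500 samples")
```

Most samples are discarded because they are already visible from a single component, so the stored roadmap stays far smaller than one that keeps every collision-free sample.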

References

- M. Suppa, Autonomous Robot Work Cell Exploration Using Multisensory Eye-in-Hand Systems, Dissertation, University of Hannover, 2007 (also published as a book: Fortschritt-Berichte VDI Reihe 8, Mess-, Steuerungs- und Regelungstechnik, Nr. 1143, ISBN: 978-3-18-514308-3).

- R. Burger, Anbindung und Evaluierung von Sensorik zur Erzeugung von Umgebungsmodellen in Robotersteuerungen, Technical Report, DLR-IB 515-2007/15, 2007.

- T. Bodenmueller, W. Sepp, M. Suppa, and G. Hirzinger, Tackling Multisensory 3D Data Acquisition and Fusion, In: Proceedings of the 2007 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), San Diego, U.S.A., 2007.

- M. Suppa, S. Kielhoefer, J. Langwald, F. Hacker, K. H. Strobl, and G. Hirzinger, The 3D-Modeller: A Multi-Purpose Vision Platform, In: Proceedings of International Conference on Robotics and Automation (ICRA), Rome, Italy, 2007.

- M. Suppa and G. Hirzinger, Multisensorielle Exploration von Roboterarbeitsraeumen, In: TM-Technisches Messen, Vol. 74[3], pages 139-146, 2007.

- M. Suppa and G. Hirzinger, Multisensorielle Exploration von Roboterarbeitsraeumen, In: Informationsfusion in der Mess- und Sensortechnik, J. Beyerer, F. Puente Leon, and K.-D. Sommer (eds.), 2006.

- M. Suppa and G. Hirzinger, Ein Hand-Auge-System zur multisensoriellen Rekonstruktion von 3D-Modellen in der Robotik, In: at-Automatisierungstechnik, Vol. 53[7], pages 323-331, 2005.

- M. Suppa and G. Hirzinger, A Novel System Approach to Multisensory Data Acquisition, In: Proceedings of the 8th Conference on Intelligent Autonomous Systems IAS-8, Amsterdam, The Netherlands, 2004.

- M. Suppa, P. Wang, K. Gupta, and G. Hirzinger, C-space Exploration Using Noisy Sensor Models, In: Proceedings of the International Conference on Robotics and Automation ICRA 2004, pages 4777-4782, New Orleans, U.S.A., 2004.

- P. Wang and K. Gupta, View Planning via Maximal C-Space Entropy Reduction, In: Fifth International Workshop on Algorithmic Foundations of Robotics WAFR 2002, Nice, France, 2002.