Haptic Rendering: Collision Detection and Response

Virtual reality technologies can now generate realistic images of virtual scenes. The next step towards greater realism is the intuitive manipulation of objects in a virtual scene, with haptic collision information fed back to the human user.

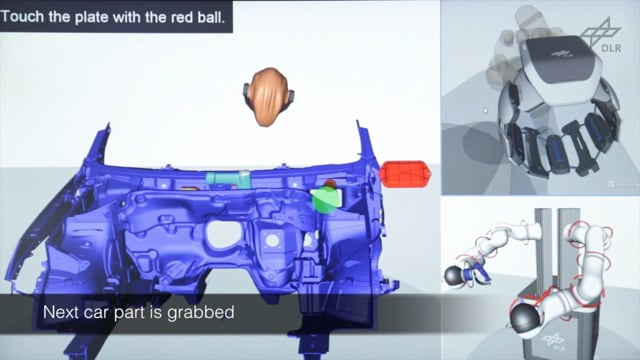

The telepresence and virtual reality group of the German Aerospace Center (DLR) develops haptic rendering algorithms that enable such realtime virtual reality manipulation scenarios. The user can manipulate complex virtual objects via our bimanual haptic interface HUG; whenever objects collide with each other, the user perceives the corresponding collision forces. A use case is given in the following car part assembly video.

A Platform for Bimanual Virtual Assembly Training with Haptic Feedback in Large Multi-Object Environments

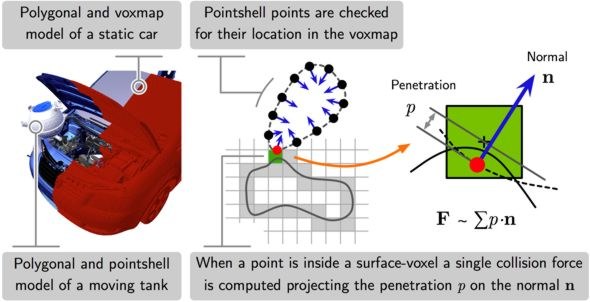

In contrast to visual rendering, which requires update rates of only 30 Hz or more for smooth visual feedback, haptic signals must be updated at a challenging rate of 1000 Hz, that is, within a budget of 1 ms per cycle, to obtain stable and realistic haptic feedback. We use an algorithm based on two data structures: voxelized distance fields (voxmaps) and point-sphere hierarchies (pointshells). Our work is inspired by the Voxmap-PointShell (VPS) Algorithm, which allows for realtime collision feedback even with objects consisting of several million triangles.
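
To illustrate how a voxmap and a pointshell can interact, the following minimal Python sketch computes a penalty force for a single haptic cycle. It is a simplified scheme in the spirit of VPS, not our actual implementation: the function names (`build_voxmap`, `lookup`, `penalty_force`), the sphere-shaped static object, the nearest-voxel lookup, and the stiffness value are all assumptions made for illustration, and the point-sphere hierarchy is replaced by a flat list of points.

```python
import math


def build_voxmap(n, h, center, radius):
    """Sample the signed distance to a sphere at the centers of an n*n*n grid.

    h is the voxel edge length; values are negative inside the sphere.
    The sphere stands in for an arbitrary static object (assumption).
    """
    return [[[math.dist(((i + 0.5) * h, (j + 0.5) * h, (k + 0.5) * h), center) - radius
              for k in range(n)]
             for j in range(n)]
            for i in range(n)]


def lookup(voxmap, h, point):
    """Return the signed distance stored in the voxel containing point, or None."""
    n = len(voxmap)
    i, j, k = (int(c // h) for c in point)
    if not (0 <= i < n and 0 <= j < n and 0 <= k < n):
        return None  # point lies outside the voxmap, so it cannot collide
    return voxmap[i][j][k]


def penalty_force(voxmap, h, pointshell, stiffness):
    """Sum the penalty forces of all pointshell points that penetrate the voxmap.

    pointshell is a flat list of (point, inward_normal) pairs of the moving
    object; each penetrating point pushes the object along its inward normal,
    scaled by the penetration depth and a hypothetical stiffness constant.
    """
    force = [0.0, 0.0, 0.0]
    for point, inward_normal in pointshell:
        distance = lookup(voxmap, h, point)
        if distance is not None and distance < 0.0:
            depth = -distance
            for axis in range(3):
                force[axis] += stiffness * depth * inward_normal[axis]
    return force


# Static sphere of radius 0.2 m in the middle of a 1 m cube (20 voxels per axis).
voxmap = build_voxmap(20, 0.05, (0.5, 0.5, 0.5), 0.2)

# Two bottom-surface points of an object hovering above the sphere;
# only the first one dips below the top of the sphere.
pointshell = [
    ((0.52, 0.52, 0.68), (0.0, 0.0, 1.0)),
    ((0.52, 0.52, 0.92), (0.0, 0.0, 1.0)),
]

print(penalty_force(voxmap, 0.05, pointshell, stiffness=1000.0))
```

In this sketch the resulting force points upwards, pushing the moving object out of the sphere. In a haptic loop, a computation of this kind would have to complete within each 1 ms cycle, which is why a hierarchy over the points matters for large models.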

Virtual reality scenarios with such haptic rendering technologies make it possible, for instance,

- to check in early stages of a product design whether different parts can be assembled optimally,

- to integrate the knowledge of manufacturers that build the final product into the product engineering steps,

- and to train mechanics in order to prepare them for future complex assembly tasks with fragile objects.

Related Interesting Links

Student Project Offers

- Satellite On-Orbit Servicing in Virtual Reality with Haptic Feedback

- Improvements of Data Structures for Collision Detection and Force Computation

- Haptic Rendering for Virtual Reality: Evaluation and Improvements

Selected Publications

- M. Sagardia, A. Martín, T. Hulin: Realtime Collision Avoidance for Mechanisms with Complex Geometries (Video), IEEE VR, 2018

- M. Sagardia, T. Hulin: Multimodal Evaluation of the Differences between Real and Virtual Assemblies, IEEE Transactions on Haptics (ToH), 2017

- M. Sagardia, T. Hulin: A Fast and Robust Six-DoF God Object Heuristic for Haptic Rendering of Complex Models with Friction, ACM VRST, Munich, Germany, 2016

- M. Sagardia, K. Hertkorn, T. Hulin, S. Schätzle, R. Wolff, J. Hummel, J. Dodiya, A. Gerndt: VR-OOS: The DLR's Virtual Reality Simulator for Telerobotic On-Orbit Servicing with Haptic Feedback, IEEE Aerospace Conference, Big Sky, Montana, USA, 2015

- M. Sagardia, T. Hulin: Fast and Accurate Distance, Penetration, and Collision Queries Using Point-Sphere Trees and Distance Fields, SIGGRAPH, 2013

- M. Sagardia, B. Weber, T. Hulin, C. Preusche, G. Hirzinger: Evaluation of Visual and Force Feedback in Virtual Assembly Verifications, IEEE VR (Honorable Mention), 2012

- R. Weller, M. Sagardia, D. Mainzer, T. Hulin, G. Zachmann, C. Preusche: A Benchmarking Suite for 6-DOF Real Time Collision Response Algorithms, VRST, 2010

- M. Sagardia, T. Hulin, C. Preusche, G. Hirzinger: Improvements of the Voxmap-PointShell Algorithm — Fast Generation of Haptic Data-Structures, IWK, 2008