Analyzing Scenes on a Supporting Plane (2004)

The Problem

Suppose objects from a known set are constrained to stand in certain 'upright' poses on a supporting plane: for instance, chairs and tables on the floor, or glasses and bottles on a table. The orientation of such a plane is often known, as for a mobile robot on flat terrain. If the plane orientation is known, the space of object poses that has to be searched to analyze such a scene is reduced to at most 4 dimensions per object (3 for translation in physical space, 1 for rotation about the axis normal to the plane). If the height of the plane is also known, as for a robot's camera system mounted at a fixed height above the ground or table, the pose space is further reduced to 3 dimensions.
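The reduced pose space can be made concrete with a small sketch. It assumes a coordinate frame whose z axis is the plane normal; the function names `pose_to_matrix` and `transform_point` are illustrative and not taken from the original system. With the plane height fixed, only `x`, `y`, and `theta` remain free.

```python
import math

def pose_to_matrix(x, y, theta, plane_height=0.0):
    """Homogeneous 4x4 transform of an upright object on a horizontal plane.

    Assumption: the z axis is the plane normal, so the only rotation is
    about z. If plane_height is known, the free parameters are x, y, theta.
    """
    c, s = math.cos(theta), math.sin(theta)
    return [
        [c, -s, 0.0, x],
        [s, c, 0.0, y],
        [0.0, 0.0, 1.0, plane_height],
        [0.0, 0.0, 0.0, 1.0],
    ]

def transform_point(T, p):
    """Map a point from the object frame into the scene frame."""
    px, py, pz = p
    return tuple(T[i][0] * px + T[i][1] * py + T[i][2] * pz + T[i][3]
                 for i in range(3))
```

For example, `transform_point(pose_to_matrix(1.0, 2.0, math.pi / 2, 0.5), (1.0, 0.0, 0.0))` places a model point that lies one unit along the object's x axis at approximately `(1.0, 3.0, 0.5)` in the scene.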

In such reduced parameter spaces, a global search for a scene interpretation may be feasible. Moreover, the particular nature of stereo data from such scenes can be exploited by very efficient algorithms that act as a computational shortcut and provide a good approximation to a full maximum-likelihood interpretation.

Methods

We use a three-camera system (Digiclops, Point Grey Research Inc.) to perform stereo processing with a horizontal and a vertical stereo pair (baseline 10 cm). Each image has a resolution of 640x480 pixels. The algorithm is a straightforward local correspondence search that minimizes the sum of absolute differences over square patches of the Laplacian-of-Gaussian (LoG) filtered images. We set a high threshold for valid regions of the LoG-filtered images, so that only regions of high scene contrast are taken into account. As a result, mainly surface creases, sharp bends, and depth discontinuities contribute to further processing. The output of stereo processing is thus a sparse representation of the scene by rather few 3D-data points that outline the objects on the table.
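The correspondence search described above can be sketched for a single pixel of the horizontal pair. This is a minimal illustration, not the Digiclops implementation: it assumes the two images are already LoG-filtered and rectified, stored as lists of rows, and it applies the contrast threshold to the filter response at the patch centre. The name `sad_disparity` and the parameters `half`, `max_disp`, and `min_response` are hypothetical.

```python
def sad_disparity(left, right, row, col, half=1, max_disp=4, min_response=1.0):
    """Disparity that minimizes the sum of absolute differences (SAD).

    left, right: LoG-filtered, rectified images as lists of rows (assumed).
    Returns None for patches that leave the image or for low-contrast pixels.
    """
    height, width = len(left), len(left[0])
    if not (half <= row < height - half and half <= col < width - half):
        return None
    # Assumed validity test: require a strong filter response at the centre.
    if abs(left[row][col]) < min_response:
        return None
    best_d, best_cost = None, float("inf")
    for d in range(max_disp + 1):
        if col - d - half < 0:
            break  # shifted patch would leave the right image
        cost = sum(
            abs(left[row + dr][col + dc] - right[row + dr][col + dc - d])
            for dr in range(-half, half + 1)
            for dc in range(-half, half + 1)
        )
        if cost < best_cost:
            best_d, best_cost = d, cost
    return best_d
```

Because low-contrast pixels return `None`, only a sparse subset of pixels yields disparities, which matches the sparse point sets described in the text.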

The sparse scene representation that we obtain from stereo processing has two major advantages for the processing steps to follow: it keeps the computational load low and it allows for a reliable scene interpretation by simple methods adapted from fuzzy-set and probability theory. It turns out that objects standing on a weakly textured plane, like our table, are visually well described by a few 3D-data points restricted to high-contrast regions of the scene.

The object models used for scene analysis are derived from empirically sampled histograms of the stereo data produced by the objects of interest. The models describe the probability for a data point at a specific position in data space to arise from a specific object with specific pose. In fuzzy-set theory, they would be called the 'membership function for data points' of the specific object and pose. Traditional fuzzy logic, however, is independent of any probabilistic interpretation of membership values.
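A histogram model of this kind might be built as follows. The sketch assumes that the sample points are already expressed in the object's own frame, that a uniform cubic grid is used, and that counts are normalized to relative frequencies; the cell size and the names `build_histogram_model` and `membership` are illustrative choices, not details from the original work.

```python
import math
from collections import Counter

def cell_index(point, cell):
    """Integer grid cell that contains a 3D point."""
    return tuple(math.floor(c / cell) for c in point)

def build_histogram_model(sample_points, cell=0.01):
    """Relative frequency of sampled stereo points per grid cell."""
    counts = Counter(cell_index(p, cell) for p in sample_points)
    total = sum(counts.values())
    return {key: n / total for key, n in counts.items()}

def membership(model, point, cell=0.01):
    """Model value at a point; zero where no sample was ever observed."""
    return model.get(cell_index(point, cell), 0.0)
```

Evaluating `membership` at a data point then plays the role of the membership function for that object, with the pose handled by first transforming the point into the object frame.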

Search and optimization of the match of all object models to the stereo data across all possible pose parameters is efficiently implemented by a fuzzy variant of the generalized Hough transform.
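The voting idea behind a generalized Hough transform can be sketched as follows. This is a plain weighted-vote version under simplifying assumptions, not necessarily the fuzzy variant used by the authors: the plane height is taken as known, points and model are reduced to 2D positions on the plane, and each model point carries a weight that stands in for its membership value. The names `hough_votes` and `best_pose` and the bin sizes are hypothetical.

```python
import math
from collections import defaultdict

def hough_votes(points, model, n_theta=8, cell=0.05):
    """Accumulate weighted votes over discretized poses (ix, iy, k).

    points: (x, y) data points on the plane.
    model: list of ((mx, my), weight) pairs in the object frame.
    """
    acc = defaultdict(float)
    for k in range(n_theta):
        theta = 2.0 * math.pi * k / n_theta
        c, s = math.cos(theta), math.sin(theta)
        for px, py in points:
            for (mx, my), w in model:
                # Object origin that would place this model point on the data point.
                ox = px - (c * mx - s * my)
                oy = py - (s * mx + c * my)
                acc[(round(ox / cell), round(oy / cell), k)] += w
    return acc

def best_pose(acc, n_theta=8, cell=0.05):
    """Pose bin with the largest accumulated evidence."""
    (ix, iy, k), score = max(acc.items(), key=lambda kv: kv[1])
    return ix * cell, iy * cell, 2.0 * math.pi * k / n_theta, score
```

Each data point votes for every pose that could explain it, so the cost grows with the number of points times the number of model cells and rotation bins, which is why a sparse point set keeps the search cheap.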

We have found that the described method for scene interpretation can cope with challenging objects such as glasses under partial occlusion and over large variations of lighting (daylight, fluorescent light, and spotlight).

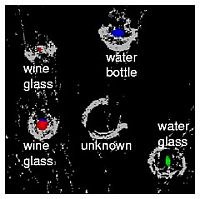

Results

An example scene features a water bottle, two wine glasses, a water glass, and a cup on a table, as seen in the image taken by one of the three cameras.

The 3D-data points obtained from stereo processing of that scene are viewed here from the near left corner of the table. The objects are mainly represented by contour points. Note the large amount of background data that arise as artifacts from stereo processing under such difficult conditions (transparent objects). This data set comprises 21,043 points.

Shown here are the data points projected onto the table with color labeling of the regions with evidence for the different objects: blue for the water bottle, red for the wine glasses, and green for the water glass. The cup is included in the scene as an outlier that is not sought, i.e., the system has no model of cups. The spurious evidence for a water bottle near the position of one of the wine glasses is suppressed by the larger evidence for a wine glass at that position. In fact, all sought objects are correctly located and identified, and the system is not confused by the presence of the cup. The cup may thus be marked as an unknown obstacle.
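The suppression of weaker, conflicting evidence can be illustrated with a simple greedy selection. This is only one plausible way to resolve such conflicts and is not claimed to be the authors' procedure; the function name `suppress_conflicts`, the separation radius, and all scores in the usage example are made up for illustration.

```python
import math

def suppress_conflicts(candidates, min_separation=0.1):
    """Keep the strongest hypothesis among candidates that overlap in position.

    candidates: (label, x, y, score) tuples, e.g. peaks of the pose search.
    A candidate is dropped if a stronger one lies within min_separation.
    """
    kept = []
    for cand in sorted(candidates, key=lambda c: c[3], reverse=True):
        _, x, y, _ = cand
        if all(math.hypot(x - kx, y - ky) >= min_separation
               for _, kx, ky, _ in kept):
            kept.append(cand)
    return kept
```

With hypothetical candidates `("water bottle", 0.50, 0.30, 4.0)`, `("wine glass", 0.52, 0.31, 9.0)`, and `("water bottle", 0.10, 0.20, 12.0)`, the weak bottle hypothesis next to the wine glass is discarded, while the bottle elsewhere on the table is kept.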

The approach to scene analysis sketched here is used in an experimental service robot system, the Robutler.

Publications

Ulrich Hillenbrand. On the relation between probabilistic inference and fuzzy sets in visual scene analysis. Pattern Recognition Letters 25 (2004), pp. 1691-1699.

Ulrich Hillenbrand, Bernhard Brunner, Christoph Borst, and Gerd Hirzinger. The Robutler: a vision-controlled hand-arm system for manipulating bottles and glasses. Proceedings International Symposium on Robotics — ISR 2004.

Ulrich Hillenbrand, Christian Ott, Bernhard Brunner, Christoph Borst, and Gerd Hirzinger. Towards service robots for the human environment: the Robutler. Proceedings Mechatronics & Robotics — MechRob 2004, pp. 1497-1502.