AI and Control

Designing control algorithms is our principal means of helping complex dynamic systems such as robots, aircraft, cars, and launchers fulfill their intended functions. These functions range from basic stabilization or damping of oscillatory dynamics, through suppressing the effect of disturbances and accurately tracking multiple references, all the way to very complex tasks, or sequences of tasks, performed more or less autonomously. Well-known examples are aircraft autopilots, which automatically track speed, course, and altitude references, and robots assembling products in a factory.

Feedback Control

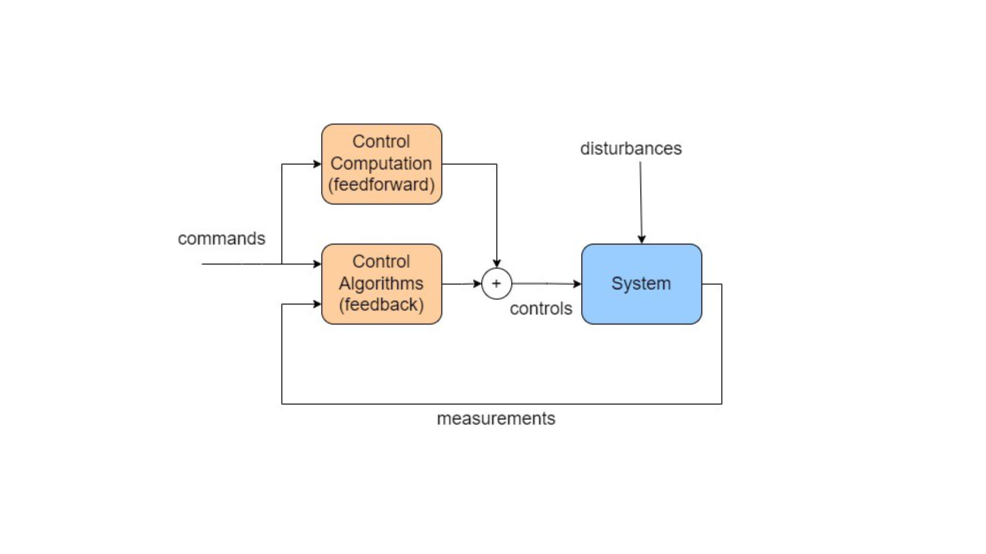

Such functions are mostly built on feedback control. This means that control commands are continuously computed and applied to the system based on its current state of motion, as registered by sensors (see the figure above). The great advantage of feedback control is that it inherently provides corrective action when the dynamic system is disturbed by external influences, or when its dynamic behavior is not exactly known in advance (which is almost always the case).
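To make this corrective effect concrete, here is a minimal Python sketch, assuming a hypothetical first-order plant x' = -a x + b u + d whose true parameters differ from the nominal model used for design, plus a constant disturbance d. All numbers and the PI gains are illustrative assumptions. An open-loop command computed from the nominal model misses the reference, while a PI feedback loop (proportional plus integral action on the measured error) drives the error to zero without ever knowing the true parameters.

```python
def simulate_tracking(use_feedback, r=1.0, dt=1e-3, t_end=20.0):
    """Return the plant output after t_end seconds for a constant reference r."""
    # True plant, unknown to the designer (hypothetical values): x' = -a x + b u + d
    a_true, b_true, d = 1.5, 0.7, 0.4     # d is a constant external disturbance
    # Nominal model the designer believes in
    a_nom, b_nom = 1.0, 1.0
    kp, ki = 2.0, 2.0                     # hypothetical PI gains
    x, integral = 0.0, 0.0
    for _ in range(int(round(t_end / dt))):
        if use_feedback:
            e = r - x                     # error from an ideal sensor measurement
            integral += e * dt
            u = kp * e + ki * integral    # PI feedback law
        else:
            u = a_nom * r / b_nom         # open-loop command from the nominal model only
        x += dt * (-a_true * x + b_true * u + d)   # explicit Euler step of the true plant
    return x
```

With these values the open-loop run settles near 0.73 instead of 1.0, whereas the feedback run reaches the reference; the integral term keeps adjusting the command until the measured error vanishes.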

The catch is that there are many ways to get feedback control wrong, with consequences ranging from missed performance targets all the way to unstable behavior that can wreck the system! Especially for highly dynamic systems like cars, aircraft, or launchers, a structured and careful approach must be taken to ensure that the system achieves good functional performance safely.
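A small, hypothetical example shows how easily feedback can go wrong. Assume a discrete-time plant x[k+1] = a x[k] + b u[k-1], where the command takes effect one sample late (a stand-in for actuator or computation delay), under proportional feedback u[k] = -K x[k]. The closed-loop poles are the roots of z^2 - a z + b K; for a = b = 1 they leave the unit circle once K exceeds 1, so simply raising the gain to obtain a faster response ends in growing oscillations. The Python sketch below checks this both from the poles and by simulation.

```python
import numpy as np

def closed_loop_poles(K, a=1.0, b=1.0):
    """Poles of x[k+1] = a x[k] + b u[k-1] with u[k] = -K x[k]."""
    # Characteristic polynomial: z^2 - a z + b K = 0
    return np.roots([1.0, -a, b * K])

def is_stable(K, a=1.0, b=1.0):
    # Discrete-time stability: all poles strictly inside the unit circle
    return bool(np.all(np.abs(closed_loop_poles(K, a, b)) < 1.0))

def simulate_delayed_loop(K, a=1.0, b=1.0, steps=60, x0=1.0):
    """Return the state trajectory; the command computed at step k acts at step k+1."""
    x, u_prev = x0, 0.0
    trajectory = [x]
    for _ in range(steps):
        u = -K * x                  # proportional feedback on the measured state
        x = a * x + b * u_prev      # plant sees last step's command (one-sample delay)
        u_prev = u
        trajectory.append(x)
    return trajectory
```

With K = 0.5 the state decays in a damped oscillation, while K = 1.5 produces an oscillation whose amplitude grows by a factor of about 1.22 per sample.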

This approach is based on control engineering, a dedicated discipline that provides very useful tools for analyzing dynamic system behavior, as well as metrics for designing and assessing control algorithms. For the actual design of these algorithms, a continuously growing palette of methods is available, each with its own strengths. Our institute is dedicated to the field of control engineering, and one of our core research topics is developing new methods and advancing them to maturity, enabling their use in designing ever more complex systems.
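Phase margin is one of the classical frequency-domain metrics used to assess a feedback design. The Python sketch below computes it for an assumed loop transfer function L(s) = K / (s (s + 1)), chosen only because the result is easy to verify by hand: it finds the crossover frequency where |L(jω)| = 1 by bisection on a logarithmic frequency axis, then reports how far the phase at that frequency lies above -180°. Plain bisection works here because this particular loop has a monotonically decreasing magnitude; a general tool must handle multiple crossings.

```python
import cmath
import math

def loop_gain(w, K=1.0):
    """Hypothetical open-loop frequency response L(jw) = K / (jw (jw + 1))."""
    s = 1j * w
    return K / (s * (s + 1.0))

def phase_margin(K=1.0, w_lo=1e-3, w_hi=1e3, iters=200):
    """Return (crossover frequency in rad/s, phase margin in degrees)."""
    # |L(jw)| decreases monotonically for this loop, so bisect for |L| = 1
    for _ in range(iters):
        w_mid = math.sqrt(w_lo * w_hi)      # bisection in log-frequency
        if abs(loop_gain(w_mid, K)) > 1.0:
            w_lo = w_mid
        else:
            w_hi = w_mid
    wc = math.sqrt(w_lo * w_hi)
    phase_deg = math.degrees(cmath.phase(loop_gain(wc, K)))
    return wc, 180.0 + phase_deg
```

For K = 1 this gives a crossover near 0.79 rad/s and a phase margin of roughly 52°; raising K moves the crossover up and the margin down, which is the classic trade-off between speed and robustness.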

The design of control algorithms nearly always requires the dynamic behavior to be captured in a simulation model. Even if the control method itself does not need one, verification by simulation is often far cheaper than testing (e.g., on aircraft). The control algorithms themselves are usually composed graphically as so-called block diagrams, in tools such as MATLAB/Simulink or Modelica. The blocks may result from a mathematical control-law synthesis method, or they may be parametrized filters, gains, and time constants that are tuned by multi-objective optimization to meet the requirements mentioned above. The translation of a block diagram into software that can run on a computer within the actual system is often automated by code generation tools.
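To illustrate what such generated code conceptually does, here is a hypothetical Python sketch (not the output of any actual code generator): each block keeps its own state and exposes an update function, and the diagram becomes a single step function called once per sample period. The first-order lag is discretized exactly, assuming the input is held constant over each sample (zero-order hold); the block names and parameters are illustrative.

```python
import math

class Gain:
    """Static gain block: y = k * u."""
    def __init__(self, k):
        self.k = k

    def step(self, u):
        return self.k * u

class FirstOrderLag:
    """Block 1 / (T s + 1), discretized exactly for a zero-order-hold input."""
    def __init__(self, T, dt):
        self.a = math.exp(-dt / T)   # discrete-time pole
        self.y = 0.0                 # internal block state

    def step(self, u):
        self.y = self.a * self.y + (1.0 - self.a) * u
        return self.y

def make_step_function(blocks):
    """Chain blocks in series, mimicking one fixed-rate 'step' function of generated code."""
    def model_step(u):
        for block in blocks:
            u = block.step(u)
        return u
    return model_step
```

For example, chaining Gain(2.0) and FirstOrderLag(T=0.5, dt=0.01) and calling the resulting step function with a constant input of 1.0 gives 2(1 - e^-1) ≈ 1.26 after 50 calls (one time constant) and settles at 2.0.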

As mentioned above, we devote much of our research to advancing and improving control methods. Here are some we have been working on recently:

Inversion-based control

A control system nearly always needs to be tuned for a specific type of system. Inversion-based control captures most of this system dependency by means of inverse model equations. Adapting a controller from one system to another then largely reduces to inserting the model equations of the new system. We can do this automatically from models defined in the Modelica language, which saves a lot of work. Additional advantages are automatic adaptation to nonlinear or varying dynamics, and decoupling when different commands have to be tracked simultaneously. We also developed ways to replace model computations with measurements of acceleration and control deflection, and were the first to bring such so-called “incremental methods” to application in flight.
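The inversion idea and its incremental variant can be sketched for an assumed scalar nonlinear plant x' = f(x) + g(x) u, with hypothetical f and g chosen only for illustration. Nonlinear dynamic inversion (NDI) solves the model for the input that yields a desired rate ν, u = (ν - f(x)) / g(x), so the tracked variable follows ν = k (r - x) regardless of the nonlinearity. The incremental form (INDI) instead takes the current rate from a measurement and only updates the previous input, u = u_prev + (ν - x'_meas) / g(x), so it needs the control effectiveness g but not f. In this Python sketch the "measurement" is simply the true plant derivative, and g is deliberately mis-estimated by 20% in the incremental test case; it is a textbook formulation, not the institute's implementation.

```python
import math

def f(x):
    # Hypothetical nonlinear plant dynamics
    return -x**3 + math.sin(x)

def g(x):
    # Hypothetical control effectiveness, always >= 1
    return 2.0 + math.cos(x)

def simulate_inversion(law, r=1.0, k=5.0, dt=1e-3, t_end=3.0, g_scale=1.0):
    """Track r with desired dynamics x' = k (r - x); law is 'ndi' or 'indi'."""
    x, u = 0.0, 0.0
    for _ in range(int(round(t_end / dt))):
        nu = k * (r - x)                 # virtual control: desired rate of x
        g_hat = g_scale * g(x)           # (possibly mis-estimated) control effectiveness
        if law == "ndi":
            # NDI: invert the full model, requires both f and g
            u = (nu - f(x)) / g_hat
        else:
            # INDI: replace f(x) by the measured rate; x_dot_meas stands in for a sensor
            x_dot_meas = f(x) + g(x) * u
            u = u + (nu - x_dot_meas) / g_hat
        x += dt * (f(x) + g(x) * u)      # explicit Euler step of the true plant
    return x
```

Both laws bring x to the reference within a fraction of a second; note that the incremental law never evaluates f inside the controller, which is what allows measurements to replace model computations.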

Robust control

This area is the most comprehensive extension of classical control theory (whose development started at the beginning of the 20th century) to systems with multiple controls and multiple sensors, aimed at tracking multiple variables simultaneously. It allows uncertainties in the system to be addressed explicitly. This is valuable, as one can balance performance (accuracy, agility, etc.) against the level of uncertainty.
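Dedicated robust control methods handle multivariable systems and synthesize controllers directly; the Python sketch below is only a much simpler scalar illustration of the underlying idea of assessing a design against a whole set of plants rather than one nominal model. It assumes a hypothetical plant b / (s (τ1 s + 1)(τ2 s + 1)) with uncertain gain b in [0.8, 1.2] and uncertain time constant τ1 in [0.5, 1], a fixed τ2 = 0.1, and a proportional controller K, and checks closed-loop stability on a grid covering this uncertainty box.

```python
import numpy as np

TAU2 = 0.1  # hypothetical, precisely known actuator time constant

def closed_loop_stable(K, b, tau1, tau2=TAU2):
    """Plant b / (s (tau1 s + 1)(tau2 s + 1)) under proportional feedback K."""
    # Characteristic polynomial: tau1*tau2 s^3 + (tau1 + tau2) s^2 + s + K b
    poles = np.roots([tau1 * tau2, tau1 + tau2, 1.0, K * b])
    return bool(np.all(poles.real < 0.0))

def robustly_stable(K, b_range=(0.8, 1.2), tau1_range=(0.5, 1.0), n=5):
    """Check stability on an n x n grid over the uncertainty box (endpoints included)."""
    return all(
        closed_loop_stable(K, b, tau1)
        for b in np.linspace(*b_range, n)
        for tau1 in np.linspace(*tau1_range, n)
    )
```

By the Routh criterion this loop is stable exactly when K b < 1/τ1 + 1/τ2. With the assumed ranges, K = 10 is stable for the nominal plant (b = 1, τ1 = 0.75) but not at the worst corner of the box (b = 1.2, τ1 = 1), whereas K = 5 is stable everywhere; widening the uncertainty box forces a lower gain, i.e. less agility. A grid check is only as good as its resolution; here the worst case lies at a corner, which the grid includes.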

Intelligent control

This is a fast-growing field that uses methods from artificial intelligence. It allows control functions, or sub-functions, to learn online and improve the dynamic behavior of the system in the process. This is longer-term research, but the opportunities seem endless. We have already implemented such methods on cars and even on aircraft in flight tests, with very promising results!

Optimization

We use optimization extensively in our design processes, mainly to remove manual trial and error when trying to meet many requirements simultaneously. We even use online optimization within systems, for example to improve controller performance in cars, to find the best trajectories for robots during operation, or to find fuel-efficient routes for aircraft.
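The following Python sketch shows, under simplifying assumptions, how optimization can replace trial-and-error tuning. It assumes a unit mass with a PD controller (derivative acting on the measurement), whose closed loop s^2 + Kd s + Kp is a standard second-order system, and uses textbook approximations for overshoot and 2% settling time instead of simulation. Hard requirements (overshoot at most 5%, initial control command at most 25 for a unit step) are treated as constraints, and the fastest feasible design is selected by exhaustive grid search; real multi-objective tuning would use more criteria and a proper optimizer.

```python
import math

def step_metrics(kp, kd):
    """Overshoot and settling time of s^2 + kd s + kp from textbook approximations."""
    wn = math.sqrt(kp)
    zeta = kd / (2.0 * wn)
    if zeta < 1.0:
        overshoot = math.exp(-zeta * math.pi / math.sqrt(1.0 - zeta**2))
        t_settle = 4.0 / (zeta * wn)           # 2 % settling-time rule of thumb
    else:
        overshoot = 0.0                         # no overshoot without a zero
        slow_pole = wn * (zeta - math.sqrt(zeta**2 - 1.0))
        t_settle = 4.0 / slow_pole              # dominated by the slower real pole
    return overshoot, t_settle

def tune(max_overshoot=0.05, max_effort=25.0):
    """Grid search: minimize settling time subject to overshoot and effort limits."""
    best = None
    for kp in range(1, 26):
        for i in range(1, 31):
            kd = 0.5 * i
            overshoot, t_settle = step_metrics(kp, kd)
            effort = kp                         # u(0) = kp for a unit reference step
            if overshoot > max_overshoot or effort > max_effort:
                continue                        # infeasible: violates a hard requirement
            if best is None or t_settle < best[2]:
                best = (float(kp), kd, t_settle)
    return best
```

On this grid the search returns Kp = 25 and Kd = 10, i.e. critical damping at the largest admissible gain, with an estimated settling time of 0.8 s.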

Observer algorithms

Sensors do not always directly measure what we want to monitor. For this reason, we design observers that estimate the desired variables from the available sensor measurements and knowledge about the system. We develop sophisticated algorithms to estimate the motion states of cars, or even the loads acting on aircraft in flight.
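An observer can be illustrated with a textbook Luenberger design, a simpler relative of the estimators used in practice. The Python sketch assumes a hypothetical unit mass whose position is measured but whose velocity is not: the observer runs a copy of the discretized model and corrects it with the measured position error through a gain L, placed here so that both eigenvalues of A - L C equal a chosen value. Starting with no knowledge of the initial velocity, the estimate converges to the true velocity.

```python
import numpy as np

dt = 0.01
A = np.array([[1.0, dt], [0.0, 1.0]])   # position/velocity of a unit mass
B = np.array([0.5 * dt**2, dt])          # input: applied force, held over each sample
C = np.array([1.0, 0.0])                 # only position is measured

def observer_gain(pole):
    """Place both eigenvalues of (A - L C) at `pole` (deadbeat if pole = 0)."""
    l1 = 2.0 - 2.0 * pole
    l2 = (1.0 - pole) ** 2 / dt
    return np.array([l1, l2])

def run_observer(steps=500, pole=0.9):
    L = observer_gain(pole)
    x = np.array([0.0, 1.0])      # true state; initial velocity unknown to the observer
    x_hat = np.zeros(2)           # observer starts with no knowledge
    for k in range(steps):
        u = np.sin(k * dt)        # known input force
        y = C @ x                 # position sensor reading
        # Model prediction plus correction by the output error
        x_hat = A @ x_hat + B * u + L * (y - C @ x_hat)
        x = A @ x + B * u
    return x, x_hat
```

Pole values closer to zero make the estimate converge faster but amplify measurement noise more strongly; a Kalman filter chooses this balance from assumed noise statistics.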

Bringing new methods to implementation and testing confronts us with all the practical constraints of making new ideas work, and even allows us to estimate the effort required to make the software certifiable for a target system (indispensable for use in aircraft or cars). That is why we perform flight tests with aircraft, driving tests with cars, and so on.

The combination of excellent system knowledge, a deep understanding of control systems, and the experience to make control algorithms work on all kinds of systems is a unique strength of our institute!