Sensor Fusion and Environmental Perception

Safe flight in any scenario requires situational awareness that takes the characteristics of the environment into account. Above all, the aircraft must be able to recognize and avoid hazards on its own, even when radio communication is interrupted and the aircraft is beyond the pilot's line of sight. This is made possible by capturing environmental features with cameras, laser scanners and other sensors, and by applying intelligent algorithms that feed this information into the flight control system. The methods are related to those used in robotics and automation technology, but must be adapted to the special requirements of unmanned aerial vehicles. To evaluate new methods, the image and sensor data processing system was integrated into both the simulation environment and the flight control system.

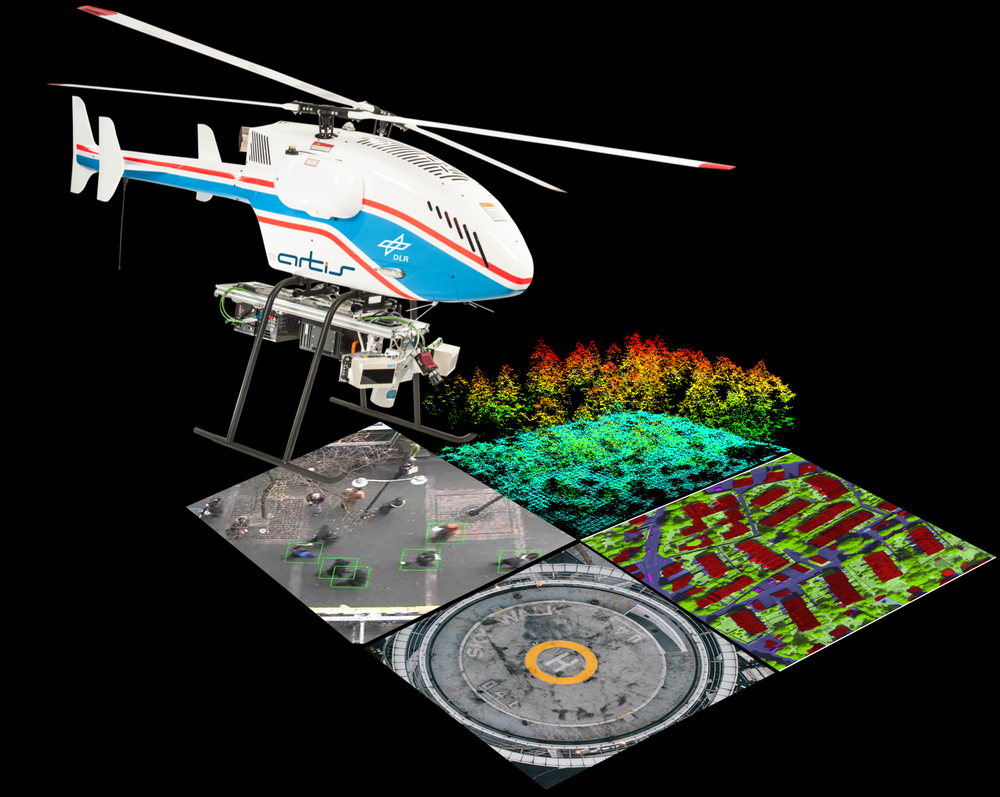

The fusion of data from satellite navigation, camera, laser and inertial sensors, and the integration of the entire sensor data processing chain into a reliably flying overall system, are of increasing importance. The environment sensors are used, among other things, to detect hazards and to estimate the aircraft's own motion. In addition, sensors are carried as payloads for further applications such as environmental mapping.
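To make the idea of fusing inertial and satellite navigation data more concrete, the following is a minimal sketch of a one-dimensional Kalman filter. It is an illustration under stated assumptions, not the system described here: the class `PositionVelocityFilter`, the helper `run`, the noise variances and the single-axis state are all hypothetical. Accelerometer samples drive the prediction, GNSS position fixes correct it, and a missing fix (`None`) simply skips the correction so that the estimate coasts on inertial data while its uncertainty grows.

```python
from dataclasses import dataclass, field


@dataclass
class PositionVelocityFilter:
    """Hypothetical 1-D Kalman filter with state [position, velocity]."""
    pos: float = 0.0
    vel: float = 0.0
    # 2x2 state covariance; large values express an uncertain start.
    P: list = field(default_factory=lambda: [[10.0, 0.0], [0.0, 10.0]])
    accel_var: float = 0.1  # assumed accelerometer noise variance, (m/s^2)^2
    gnss_var: float = 1.0   # assumed GNSS position noise variance, m^2

    def predict(self, accel: float, dt: float) -> None:
        """Propagate state and covariance with one inertial sample."""
        self.pos += self.vel * dt + 0.5 * accel * dt * dt
        self.vel += accel * dt
        (p00, p01), (p10, p11) = self.P
        q = self.accel_var
        # P <- F P F^T + Q, with F = [[1, dt], [0, 1]]
        self.P = [
            [p00 + dt * (p01 + p10) + dt * dt * p11 + q * dt**4 / 4,
             p01 + dt * p11 + q * dt**3 / 2],
            [p10 + dt * p11 + q * dt**3 / 2,
             p11 + q * dt * dt],
        ]

    def update_gnss(self, measured_pos: float) -> None:
        """Correct the state with one GNSS position fix (H = [1, 0])."""
        (p00, p01), (p10, p11) = self.P
        s = p00 + self.gnss_var          # innovation variance
        k0, k1 = p00 / s, p10 / s        # Kalman gain
        innovation = measured_pos - self.pos
        self.pos += k0 * innovation
        self.vel += k1 * innovation
        # P <- (I - K H) P
        self.P = [
            [(1 - k0) * p00, (1 - k0) * p01],
            [p10 - k1 * p00, p11 - k1 * p01],
        ]


def run(kf, samples, dt):
    """Feed (accel, gnss_pos) pairs; gnss_pos=None models a GNSS outage."""
    for accel, gnss_pos in samples:
        kf.predict(accel, dt)
        if gnss_pos is not None:
            kf.update_gnss(gnss_pos)
    return kf
```

In this sketch, calling `run` with a stretch of `(accel, None)` samples shows the effect of an outage: the position variance `kf.P[0][0]` increases with every prediction step. A vision-based position or velocity measurement could be added as a further update step of the same form to bound that growth.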

The research topics developed in recent years and already tested with unmanned helicopters include:

- improving flight state estimation, reducing inertial drift and stabilizing flight (including hover), even when satellite navigation is degraded or fails, by determining ego-motion and position from mono and stereo image data,

- automatic search and tracking of moving ground objects,

- aerial photography with intelligent camera control and automatic assembly of aerial photographs into a map,

- detection and mapping of hazards such as buildings in real time with stereo- and laser-based distance measurements, and avoidance of collisions with detected, previously unknown obstacles.
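The stereo-based distance measurement named in the last item rests on a standard relation for a rectified stereo pair: depth equals focal length times baseline divided by disparity. The sketch below illustrates only that relation and a simple distance check. The function names, the parameter values and the safety-distance rule are assumptions made for illustration, not the method used in the work described here.

```python
from typing import Optional


def depth_from_disparity(disparity_px: float,
                         focal_px: float,
                         baseline_m: float) -> Optional[float]:
    """Depth in metres for a rectified stereo pair: Z = f * B / d.

    Returns None when the disparity is not positive, i.e. when no
    valid match exists or the point is effectively at infinity.
    """
    if disparity_px <= 0:
        return None
    return focal_px * baseline_m / disparity_px


def is_hazard(depth_m: Optional[float], safety_distance_m: float) -> bool:
    """Hypothetical rule: flag a point closer than the safety distance."""
    return depth_m is not None and depth_m < safety_distance_m


# Example with assumed values: 800 px focal length, 0.3 m baseline.
depth = depth_from_disparity(disparity_px=20.0, focal_px=800.0, baseline_m=0.3)
print(depth, is_hazard(depth, safety_distance_m=15.0))
```

Because depth is inversely proportional to disparity, a fixed disparity error translates into a depth error that grows roughly with the square of the distance, which is one reason stereo ranging is often combined with laser-based measurements.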

Furthermore, there are synergies with topics in manned aviation and space flight. Examples are the detection of other aircraft for improved automatic collision avoidance (sense-and-avoid or see-and-avoid) and the evaluation of optical navigation and mapping methods for automatic precision landing on the Moon and other celestial bodies.