Sensor and environmental perception

The safe operation of unmanned aerial vehicles (UAVs) in a wide range of scenarios requires comprehensive situational awareness. This includes the ability to accurately perceive the environment and make autonomous decisions based on the collected data. In particular, in the event of a communication failure or when operating beyond the visual line of sight of the pilots, UAVs must be capable of reliably detecting and avoiding hazards.

To achieve this, environmental features are captured using cameras, laser scanners, and other sensors, with the gathered information seamlessly integrated into the flight control system through intelligent algorithms. A key component of this process is sensor data fusion – combining inputs from satellite navigation, camera, laser, and inertial sensors into a reliable overall system. This system serves both for hazard detection and for determining the UAV's own motion.
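As a rough illustration of the fusion idea, the sketch below combines inertial and satellite navigation data in a one-dimensional Kalman filter: acceleration readings drive the prediction, and position fixes correct the drift that builds up. This is not the system described here. The class `PositionVelocityFilter`, the helper `compare_drift`, and all noise values are assumptions chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class PositionVelocityFilter:
    """1-D Kalman filter with state [position, velocity] (hypothetical example)."""
    pos: float = 0.0
    vel: float = 0.0
    # 2x2 state covariance, stored element by element
    p00: float = 1.0
    p01: float = 0.0
    p10: float = 0.0
    p11: float = 1.0

    def predict(self, accel, dt, accel_var):
        """Propagate the state with one inertial acceleration reading."""
        self.pos += self.vel * dt + 0.5 * accel * dt * dt
        self.vel += accel * dt
        # P = F P F^T + Q with F = [[1, dt], [0, 1]]
        p00 = self.p00 + dt * (self.p10 + self.p01) + dt * dt * self.p11
        p01 = self.p01 + dt * self.p11
        p10 = self.p10 + dt * self.p11
        p11 = self.p11
        # Q models white acceleration noise with variance accel_var
        self.p00 = p00 + accel_var * dt**4 / 4.0
        self.p01 = p01 + accel_var * dt**3 / 2.0
        self.p10 = p10 + accel_var * dt**3 / 2.0
        self.p11 = p11 + accel_var * dt**2

    def update_position(self, measured_pos, meas_var):
        """Correct the state with one position fix (e.g. satellite navigation)."""
        innovation = measured_pos - self.pos
        s = self.p00 + meas_var          # innovation variance
        k0 = self.p00 / s                # gain for position
        k1 = self.p10 / s                # gain for velocity
        self.pos += k0 * innovation
        self.vel += k1 * innovation
        p00, p01, p10, p11 = self.p00, self.p01, self.p10, self.p11
        # P = (I - K H) P with H = [1, 0]
        self.p00 = (1 - k0) * p00
        self.p01 = (1 - k0) * p01
        self.p10 = p10 - k1 * p00
        self.p11 = p11 - k1 * p01


def compare_drift(steps=100, dt=0.1, accel_bias=0.2):
    """Return (fused_error, inertial_only_error) for a vehicle moving at 1 m/s.

    The true acceleration is zero; the simulated accelerometer reports a
    constant bias, which is what makes inertial-only navigation drift.
    """
    fused = PositionVelocityFilter(vel=1.0)
    inertial_only = PositionVelocityFilter(vel=1.0)
    true_pos = 0.0
    for _ in range(steps):
        true_pos += 1.0 * dt
        fused.predict(accel_bias, dt, accel_var=1.0)
        fused.update_position(true_pos, meas_var=1.0)
        inertial_only.predict(accel_bias, dt, accel_var=1.0)
    return abs(fused.pos - true_pos), abs(inertial_only.pos - true_pos)
```

Calling `compare_drift()` returns the position error with and without the position updates, which makes the effect of the correction step visible. A real flight system estimates attitude, sensor biases, and three-dimensional motion, and may use camera or laser measurements in the same correction role.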

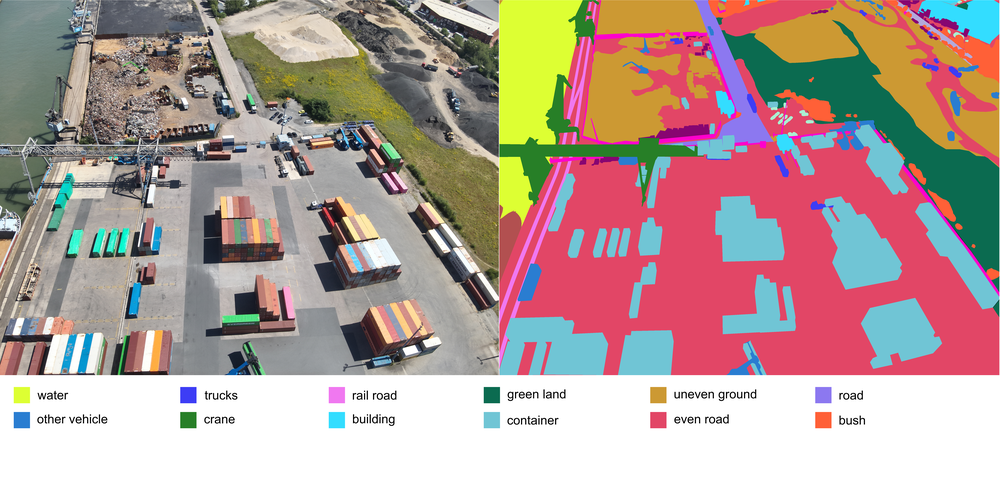

Environmental sensors also enable applications such as environmental mapping and automatic collision avoidance. The underlying methods originate from robotics and automation technology and have been specifically adapted to meet the requirements of unmanned aerial vehicles. To support the development and evaluation of these technologies, an image and sensor data processing system has been integrated into both the simulation environment and the flight control system.

A central component of our work is the testing of the developed technologies using UAV prototypes in realistic flight trials. These tests allow us to evaluate the performance of algorithms and systems under practical conditions and to continuously optimize them. To ensure maximum flexibility when integrating or switching sensors, the prototypes are equipped with special payload rails. These modular mounting systems make it easy to install and exchange various sensors such as cameras, laser scanners, or other measuring devices. This enables rapid testing of different configurations, significantly increasing the efficiency and versatility of the development process.

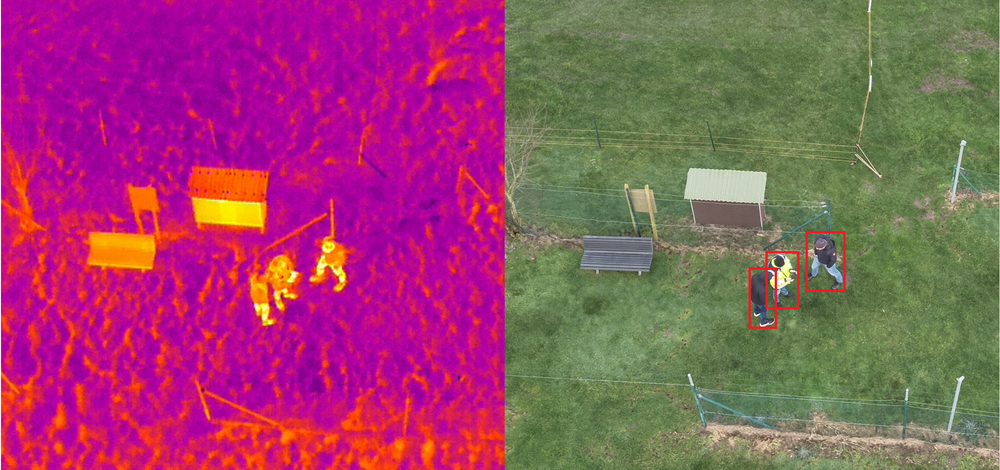

Our research focuses on key areas of sensor and environmental perception. One focus is improving flight state estimation: monocular and stereo image data are used to reduce inertial drift and keep the flight stable, even if satellite navigation fails. In addition, we are developing algorithms for the autonomous search and tracking of moving ground objects. Another area is intelligent aerial imaging, where cameras are controlled for automatic image capture and the images are compiled into precise maps. This is complemented by real-time hazard mapping, which uses stereo and laser-based distance measurements to detect obstacles and prevent collisions. These research areas form the foundation for safe and versatile unmanned aviation.
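To make the hazard mapping idea more concrete, the following sketch converts a stereo disparity into a distance using the pinhole relation Z = f · B / d and records the resulting obstacle in a small two-dimensional grid centred on the vehicle. It is a simplified illustration under stated assumptions, not the mapping method used in this work: `disparity_to_depth`, `HazardGrid`, the log-odds increment, and all numeric values are hypothetical, and free-space clearing along the measurement ray is omitted.

```python
import math


def disparity_to_depth(disparity_px, focal_px, baseline_m):
    """Stereo range from the pinhole relation Z = f * B / d.

    Returns None when the disparity is not positive (no valid match).
    """
    if disparity_px <= 0:
        return None
    return focal_px * baseline_m / disparity_px


class HazardGrid:
    """Square log-odds occupancy grid with the vehicle at its centre."""

    def __init__(self, size_m, cell_m, hit_log_odds=0.85):
        self.cell_m = cell_m
        self.n = int(round(size_m / cell_m))
        self.hit_log_odds = hit_log_odds  # assumed evidence per range hit
        self.log_odds = [[0.0] * self.n for _ in range(self.n)]

    def _index(self, x_m, y_m):
        """Map vehicle-relative metres to (row, col), or None if outside."""
        col = math.floor(x_m / self.cell_m) + self.n // 2
        row = math.floor(y_m / self.cell_m) + self.n // 2
        if 0 <= row < self.n and 0 <= col < self.n:
            return row, col
        return None

    def add_range(self, range_m, bearing_rad):
        """Record an obstacle at the end point of one range measurement."""
        idx = self._index(range_m * math.cos(bearing_rad),
                          range_m * math.sin(bearing_rad))
        if idx is None:
            return False
        row, col = idx
        self.log_odds[row][col] += self.hit_log_odds
        return True

    def occupancy(self, x_m, y_m):
        """Occupancy probability of the cell containing (x_m, y_m)."""
        idx = self._index(x_m, y_m)
        if idx is None:
            return None
        row, col = idx
        return 1.0 / (1.0 + math.exp(-self.log_odds[row][col]))


# Usage: one stereo match straight ahead of the vehicle.
depth_m = disparity_to_depth(disparity_px=20.0, focal_px=800.0, baseline_m=0.25)
grid = HazardGrid(size_m=40.0, cell_m=1.0)
grid.add_range(depth_m, bearing_rad=0.0)
```

Laser range measurements could be fed through the same `add_range` call, which is one reason a common grid representation is convenient when stereo and laser data are combined.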

Particularly in the field of automatic collision avoidance, also known as "sense/see-and-avoid," our research results are being applied to improve the detection of other aircraft. This enhances flight safety, which is of great importance for both civilian and military applications. Furthermore, optical navigation techniques are being evaluated that enable precise landings, even on the Moon and other celestial bodies. These approaches make it possible to land in complex environments such as rough terrain, and to do so during search and rescue missions. The synergies between these technologies make UAVs highly adaptable tools for a wide range of scenarios.