Institute for AI Safety and Security

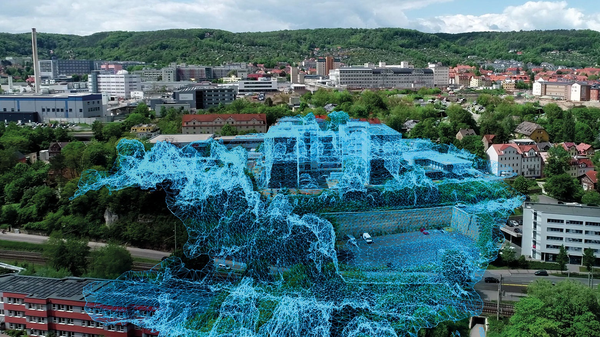

At the Institute for AI Safety and Security, we research and develop AI-related methods, processes, algorithms, and technologies, as well as execution and system environments. Our focus is on safe and standard-compliant AI, on cybersecurity for AI and for open data and service ecosystems, and on facilitating autonomous mobility and logistics.