StereoInstancesOnSurfaces

The Stereo Instances on Surfaces Dataset (STIOS) was created for the evaluation of instance-based algorithms. It is a representative dataset intended to provide uniform comparability for instance detection and segmentation across different input modalities (RGB, RGB-D, stereo RGB). STIOS is mainly intended for robotic applications (e.g. object manipulation), which is why the dataset focuses on objects placed on horizontal surfaces.

Download

The dataset can be downloaded via Zenodo:

https://zenodo.org/record/4706907

Code utilities are available on GitHub:

https://github.com/DLR-RM/stios-utils

Sensors

STIOS contains recordings from two different sensors: an rc_visard 65 color and a Stereolabs ZED camera. Besides the stereo RGB images (left and right), the internally generated depth maps are saved for both sensors. The ZED sensor additionally provides normal images and point cloud data, which are also included in STIOS. Since some objects and surfaces have little texture, which degrades the quality of the depth map, an additional LED projector with a random point pattern is used when recording the depth images (rc_visard 65 color only). Consequently, for the rc_visard 65 color, STIOS includes RGB images and the resulting depth maps both with and without the projected pattern.

The large number of input modalities should enable the evaluation of a wide variety of methods. As you can see in the picture, the ZED sensor was mounted above the rc_visard 65 lenses to obtain a similar viewing angle. This allows methods to be compared across sensors, for example with regard to how well they generalize to a different sensor or how the quality of the input modality affects them.

Objects

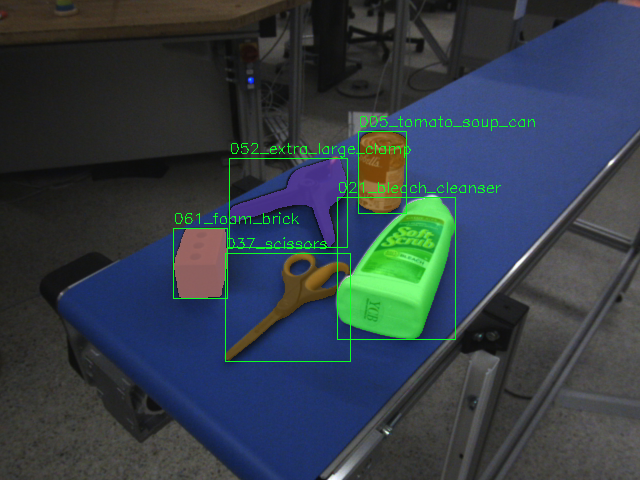

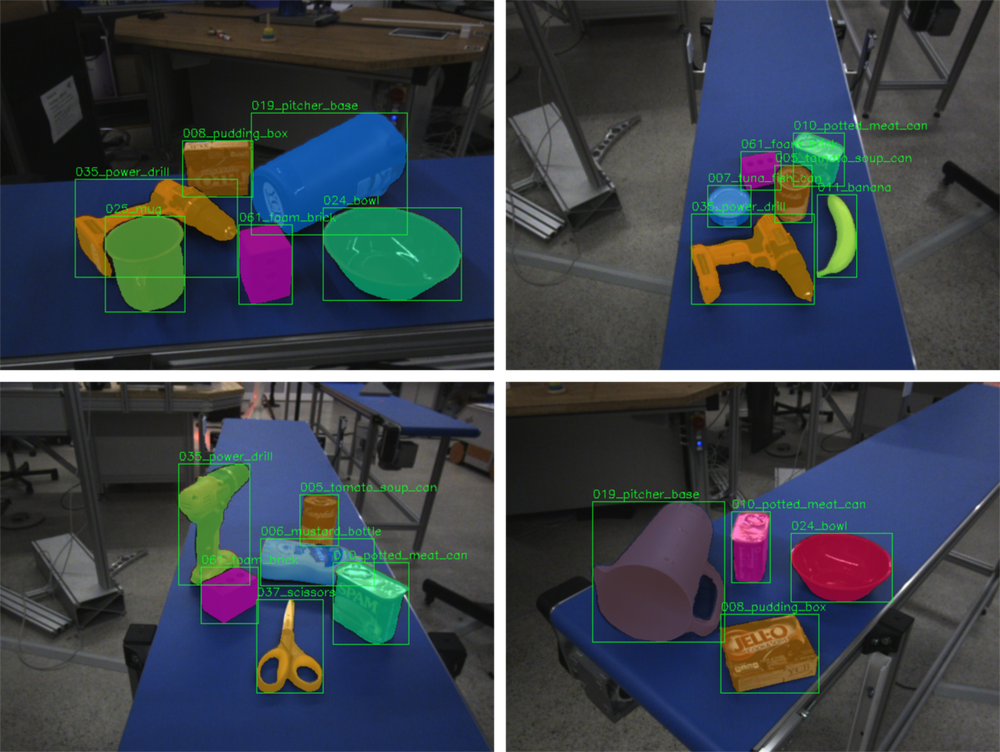

The dataset contains the following objects from the YCB video dataset and thus covers several application areas such as unknown instance segmentation, instance detection and segmentation (detection + classification):

003_cracker_box, 005_tomato_soup_can, 006_mustard_bottle, 007_tuna_fish_can, 008_pudding_box, 010_potted_meat_can, 011_banana, 019_pitcher_base, 021_bleach_cleanser, 024_bowl, 025_mug, 035_power_drill, 037_scissors, 052_extra_large_clamp, 061_foam_brick.

Because these objects are widely used in robotic applications, 3D models are available for each of them, which can be used to generate synthetic training data, e.g. for instance detection based on RGB-D. To ensure that the 15 objects occur evenly across the dataset, 4-6 objects are selected randomly and automatically for each sample. The arrangement of the objects is either easy (objects do not touch) or difficult (objects may touch or lie on top of each other).
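The selection step can be illustrated with a minimal sketch. It assumes a uniform draw of the object count and sampling without replacement; the actual procedure used when recording STIOS is not documented here, and the function name `sample_scene_objects` is hypothetical.

```python
import random

# Object names exactly as listed in the dataset description.
STIOS_OBJECTS = [
    "003_cracker_box", "005_tomato_soup_can", "006_mustard_bottle",
    "007_tuna_fish_can", "008_pudding_box", "010_potted_meat_can",
    "011_banana", "019_pitcher_base", "021_bleach_cleanser", "024_bowl",
    "025_mug", "035_power_drill", "037_scissors", "052_extra_large_clamp",
    "061_foam_brick",
]


def sample_scene_objects(rng, min_objects=4, max_objects=6):
    """Pick 4-6 distinct objects at random (illustrative assumption only)."""
    count = rng.randint(min_objects, max_objects)  # both bounds inclusive
    return rng.sample(STIOS_OBJECTS, count)  # no object is picked twice


rng = random.Random(0)  # fixed seed so the sketch is reproducible
scene = sample_scene_objects(rng)
print(scene)
```

Sampling without replacement matches the statement that each object appears at most once per image.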

Surroundings

The dataset contains 8 different environments in order to cover variation in environmental parameters such as lighting, background, and scene surface. Scenes were recorded in the following environments: office carpet, workbench, white table, wooden table, conveyor belt, lab floor, wooden plank, and tool cabinet.

The scenes were chosen carefully to ensure that they contain surfaces that are friendly as well as surfaces that are challenging for stereo sensors. STIOS therefore contains low-texture surfaces (e.g. white table, conveyor belt) and texture-rich surfaces (e.g. lab floor, wooden plank). These variations in surfaces and environments make it possible to evaluate methods in terms of their robustness and generalization to various environmental parameters.

For each scene surface, 3 easy and 3 difficult samples are generated from each of 4 manually set camera angles (approx. 0.3-1 m distance). As the illustration shows, even with an easy object arrangement the objects can occlude each other from some camera angles. The 6 samples per camera setting result in 24 samples per environment for each sensor, and thus in a total of 192 samples per sensor.
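The sample counts follow directly from the numbers above. The short sketch below only reproduces that arithmetic; the variable names are illustrative and the environment names are taken from the prose, not from the folder names on disk.

```python
# Environments as named in the text (not necessarily the folder names).
ENVIRONMENTS = [
    "office carpet", "workbench", "white table", "wooden table",
    "conveyor belt", "lab floor", "wooden plank", "tool cabinet",
]
CAMERA_ANGLES = 4      # manually set camera angles per scene surface
EASY_SAMPLES = 3       # easy arrangements per camera angle
DIFFICULT_SAMPLES = 3  # difficult arrangements per camera angle
SENSORS = 2            # rc_visard 65 color and ZED

samples_per_angle = EASY_SAMPLES + DIFFICULT_SAMPLES           # 6
samples_per_environment = samples_per_angle * CAMERA_ANGLES    # 24
samples_per_sensor = samples_per_environment * len(ENVIRONMENTS)  # 192
total_samples = samples_per_sensor * SENSORS                   # 384

print(samples_per_angle, samples_per_environment, samples_per_sensor, total_samples)
```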

Annotations

For each of these samples (192x2) all object instances in the left camera image were annotated manually (instance mask + object class). The annotations are available in the form of 8-bit grayscale images, which represent the semantic classes in the image. Since each object appears only once in the image, object instance masks can also be obtained from this format at the same time.
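Because every class occurs at most once per image, one boolean mask per gray value is already an instance mask. The following is a minimal sketch with NumPy on a synthetic array. It assumes that the gray value 0 marks the background and that each other gray value identifies one class; check the actual encoding in the dataset before relying on this. The function name `instance_masks` is hypothetical and not part of stios-utils.

```python
import numpy as np


def instance_masks(gt, background=0):
    """Return {gray_value: boolean mask} for every non-background value.

    Assumes `background` is the gray value of unlabeled pixels (assumption).
    """
    class_ids = [int(v) for v in np.unique(gt) if v != background]
    return {cid: gt == cid for cid in class_ids}


# Synthetic 4x5 stand-in for an 8-bit grayscale annotation image.
gt = np.zeros((4, 5), dtype=np.uint8)
gt[0:2, 0:2] = 3  # one object with gray value 3
gt[2:4, 3:5] = 7  # another object with gray value 7

masks = instance_masks(gt)
for class_id, mask in masks.items():
    print(class_id, int(mask.sum()), "pixels")
```

A real annotation image would be loaded from the `gt` folder with an image library of your choice and passed in as a 2D `uint8` array.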

The dataset is structured as follows:

    STIOS
    |--rc_visard
    |  |--conveyor_belt
    |  |  |--left_rgb
    |  |  |--right_rgb
    |  |  |--gt
    |  |  |--depth
    |  |  |--left_rgb_pattern
    |  |  |--right_rgb_pattern
    |  |  |--depth_pattern
    |  |--lab_floor
    |  |--...
    |--zed
    |  |--conveyor_belt
    |  |  |--left_rgb
    |  |  |--right_rgb
    |  |  |--gt
    |  |  |--depth
    |  |  |--normals
    |  |  |--pcd
    |  |--lab_floor
    |  |--...
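The layout above maps naturally onto a small path helper. This is a sketch under stated assumptions: the sensor and modality folder names are taken from the tree, while the helper `modality_dir`, the `MODALITIES` table, and the root path `"STIOS"` are hypothetical. Environment folder names other than `conveyor_belt` and `lab_floor` are not listed in the tree, so they are not assumed here.

```python
from pathlib import Path

COMMON = ("left_rgb", "right_rgb", "gt", "depth")

# Modality folders per sensor, as shown in the directory tree.
MODALITIES = {
    "rc_visard": COMMON + ("left_rgb_pattern", "right_rgb_pattern", "depth_pattern"),
    "zed": COMMON + ("normals", "pcd"),
}


def modality_dir(root, sensor, environment, modality):
    """Build the folder path for one sensor/environment/modality combination."""
    if sensor not in MODALITIES:
        raise ValueError(f"unknown sensor: {sensor!r}")
    if modality not in MODALITIES[sensor]:
        raise ValueError(f"{sensor!r} has no modality {modality!r}")
    return Path(root) / sensor / environment / modality


print(modality_dir("STIOS", "zed", "conveyor_belt", "normals"))
print(modality_dir("STIOS", "rc_visard", "lab_floor", "depth_pattern"))
```

Validating the modality per sensor catches mistakes such as requesting `normals` for the rc_visard, which only the ZED provides.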

We also provide code utilities which allow visualization of images and annotations of STIOS and contain various utility functions to e.g. generate bounding box annotations from the semantic grayscale images.
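To illustrate how bounding boxes can be derived from a semantic grayscale image, here is a minimal NumPy sketch. It is not the stios-utils implementation; the function name `bounding_boxes`, the background value 0, and the inclusive `(x_min, y_min, x_max, y_max)` box convention are assumptions made for this example.

```python
import numpy as np


def bounding_boxes(gt, background=0):
    """Return {gray_value: (x_min, y_min, x_max, y_max)} with inclusive pixel bounds."""
    boxes = {}
    for class_id in np.unique(gt):
        if class_id == background:  # assumed background value
            continue
        ys, xs = np.nonzero(gt == class_id)  # row and column indices of the object
        boxes[int(class_id)] = (
            int(xs.min()), int(ys.min()), int(xs.max()), int(ys.max())
        )
    return boxes


# Synthetic 6x8 stand-in for an annotation image.
gt = np.zeros((6, 8), dtype=np.uint8)
gt[1:3, 2:5] = 4  # rows 1-2, columns 2-4
gt[4:6, 6:8] = 9  # rows 4-5, columns 6-7

boxes = bounding_boxes(gt)
print(boxes)
```

This works per gray value because each object appears only once per image; with repeated classes, a connected-component step would be needed first.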

Citation

If STIOS is useful for your research, please cite:

    @misc{durner2021unknown,
      title={Unknown Object Segmentation from Stereo Images},
      author={Maximilian Durner and Wout Boerdijk and Martin Sundermeyer and Werner Friedl and Zoltan-Csaba Marton and Rudolph Triebel},
      year={2021},
      eprint={2103.06796},
      archivePrefix={arXiv},
      primaryClass={cs.CV}
    }

STIOS in projects

Unknown Object Segmentation from Stereo Images

M. Durner, W. Boerdijk, M. Sundermeyer, W. Friedl, Z.-C. Marton, and R. Triebel. Unknown Object Segmentation from Stereo Images. In: 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems, IROS 2021. International Conference on Intelligent Robots and Systems, 27 Sep - 1 Oct, Prague. ISBN 978-166541714-3. ISSN 2153-0858.

This method enables the segmentation of unknown object instances that are located on horizontal surfaces (e.g. tables, floors, etc.). Because depth data is often incomplete in robotic applications, stereo RGB images are used here. On the one hand, STIOS is employed to show that stereo images are suitable for unknown instance segmentation; on the other hand, it is used for comparison with existing work, which for the most part accesses depth data directly.

"What's This?" - Learning to Segment Unknown Objects from Manipulation Sequences

W. Boerdijk, M. Sundermeyer, M. Durner, and R. Triebel. 'What's This?' - Learning to Segment Unknown Objects from Manipulation Sequences. In: 2021 IEEE International Conference on Robotics and Automation, ICRA 2021. IEEE Robotics and Automation Society. 2021 IEEE International Conference on Robotics and Automation, ICRA 2021, 31 May - 05 June 2021, Xi'an, China / online (hybrid). ISBN 978-172819077-8. ISSN 1050-4729.

This work deals with the segmentation of objects that have been grasped by a robotic arm. With the help of this method it is possible to generate object-specific image data in an automated process. This data can then be used for training object detectors or segmentation approaches. In order to show the usability of the generated data, STIOS is used as an evaluation data set for instance segmentation on RGB images.

Similar datasets

There exist several datasets of objects on table-top surfaces with different annotation formats:

- OCID consists of 89 objects captured by two sensors with different physical object-to-object configurations (e.g. clearly separated, stacked etc.).
  - M. Suchi, T. Patten, D. Fischinger, and M. Vincze. EasyLabel: a semi-automatic pixel-wise object annotation tool for creating robotic RGB-D datasets, International Conference on Robotics and Automation (ICRA), 2019.
- OSD has similar physical arrangements for cylindrical and box-shaped objects.
  - A. Richtsfeld, T. Mörwald, J. Prankl, M. Zillich, and M. Vincze. Segmentation of unknown objects in indoor environments, International Conference on Intelligent Robots and Systems (IROS), 2012.
- The TUW dataset presents highly cluttered scenes.
  - A. Aldoma, T. Fäulhammer, and M. Vincze. Automation of 'ground truth' annotation for multi-view RGB-D object instance recognition dataset, International Conference on Intelligent Robots and Systems (IROS), 2014.
- Others focus on object detection and pose estimation or point cloud annotations.
  - Z. Sui, Z. Ye, and O. C. Jenkins. Never Mind the Bounding Boxes, Here's the SAND Filters, arXiv preprint arXiv:1808.04969, 2018.
  - C. Rennie, R. Shome, K. E. Bekris, and A. F. De Souza. A dataset for improved rgbd-based object detection and pose estimation for warehouse pick-and-place, Robotics and Automation Letters (RA-L), 2016.
  - A. Ecins, C. Fermüller, and Y. Aloimonos. Cluttered scene segmentation using the symmetry constraint, International Conference on Robotics and Automation (ICRA), 2016.