Texture-Based Tracking of Rigid Body Motion in 6-DoF (2004)

The Problem

When a rigid object moves in 3D space relative to a camera, it is often useful to know how its pose relative to the camera changes in all six degrees of freedom (DoF). The 6-DoF tracking problem arises in numerous applications within and beyond robotics, such as manipulation of moving objects, navigation of a mobile robot or vehicle, or pose estimation of a handheld sensing device.

The problem of visual object tracking is characterized by the following features:

- the projective camera model for accurate tracking is numerically expensive,

- the object may be partially occluded, e.g., by a hand of a person holding it,

- object or camera motion changes the view, and hence the appearance, of the object,

- object motion may change the illumination, and hence the appearance, of the object.

Existing tracking algorithms rely mostly on landmarks, which must be easy to detect robustly. When natural landmarks such as edges or corners are used, only polyhedral objects can be tracked. Attaching artificial landmarks to the objects, on the other hand, is often undesirable or impossible.

Methods

In this research project, an approach is developed that does not rely on landmarks. Instead, it uses an a-priori known geometric model of the object, optionally together with a reference image. Often this is not a real restriction, since the object model has to be known anyway in order to carry out specific grasping and manipulation tasks.

The approach is based on matching of two camera views of the tracked object. Both images of the object are mapped projectively onto its geometric surface model given a pose hypothesis. From an initial crude estimate of the object pose, the pose estimate is varied until the two mapped images produce the least sum of squared differences. Minimization is carried out by a sequence of Newton steps.
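The minimization loop described above can be illustrated with a small synthetic sketch. It is not the implementation from [1] or [2]: it assumes a pinhole camera with unit focal length, an axis-angle plus translation pose vector, an analytic function `image` standing in for interpolated camera intensities, and a Gauss-Newton step with a finite-difference Jacobian in place of whatever Newton variant the original uses. All function and variable names are hypothetical.

```python
import numpy as np

def rotation_from_axis_angle(w):
    """Rodrigues formula: axis-angle vector (3,) to rotation matrix (3, 3)."""
    theta = np.linalg.norm(w)
    if theta < 1e-12:
        return np.eye(3)
    k = w / theta
    K = np.array([[0.0, -k[2], k[1]],
                  [k[2], 0.0, -k[0]],
                  [-k[1], k[0], 0.0]])
    return np.eye(3) + np.sin(theta) * K + (1.0 - np.cos(theta)) * (K @ K)

def project(points, pose):
    """Project model points with pose = (axis-angle, translation), unit focal length."""
    cam = points @ rotation_from_axis_angle(pose[:3]).T + pose[3:]
    return cam[:, 0] / cam[:, 2], cam[:, 1] / cam[:, 2]

def image(u, v):
    """Assumed smooth synthetic texture standing in for the current camera image."""
    return np.sin(9 * u + 1) * np.cos(7 * v) + 0.5 * np.sin(5 * u - 6 * v)

def residuals(pose, points, reference):
    """Intensity difference per model point: current image minus reference texture."""
    u, v = project(points, pose)
    return image(u, v) - reference

def gauss_newton(pose0, points, reference, iterations=10, eps=1e-6):
    """Reduce the sum of squared differences by repeated Gauss-Newton steps."""
    pose = np.array(pose0, dtype=float)
    for _ in range(iterations):
        r = residuals(pose, points, reference)
        J = np.empty((r.size, 6))
        for k in range(6):  # forward-difference Jacobian, one pose parameter at a time
            d = np.zeros(6)
            d[k] = eps
            J[:, k] = (residuals(pose + d, points, reference) - r) / eps
        step, *_ = np.linalg.lstsq(J, -r, rcond=None)  # solves J * step = -r in the least-squares sense
        pose += step
    return pose

# Synthetic surface patch (slightly curved) and a reference texture registered to it.
xs, ys = np.meshgrid(np.linspace(-0.4, 0.4, 15), np.linspace(-0.4, 0.4, 15))
xs, ys = xs.ravel(), ys.ravel()
points = np.column_stack([xs, ys, 0.3 * xs**2 + 0.2 * xs * ys])
true_pose = np.array([0.10, -0.20, 0.05, 0.02, -0.03, 2.0])
reference = image(*project(points, true_pose))

# Crude initial estimate, then refinement.
start_pose = true_pose + np.array([0.01, -0.01, 0.01, 0.01, 0.01, -0.02])
estimate = gauss_newton(start_pose, points, reference)
ssd_start = np.sum(residuals(start_pose, points, reference) ** 2)
ssd_final = np.sum(residuals(estimate, points, reference) ** 2)
print("SSD before:", ssd_start, "after:", ssd_final)
```

The sketch corresponds most closely to the monocular case, where a reference texture is already attached to the model points. A real tracker would sample camera images with interpolation and would likely use analytic derivatives for speed.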

Two approaches can be distinguished based on the number of cameras used: monocular tracking and binocular tracking.

In the case of monocular tracking, the approach consists in matching the current view of an object obtained from a single camera with a reference view of that object [1]. The variation of the intensity image due to changes in illumination has to be compensated.
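The text does not say how the illumination change is compensated. One common choice, shown here purely as an assumption and not as the method of [1], is an affine intensity model: a gain and an offset are fitted by least squares so that the current intensities best match the reference intensities at the same model points. The function name and the sample values are hypothetical.

```python
import numpy as np

def fit_gain_offset(current, reference):
    """Least-squares fit of reference ≈ gain * current + offset (assumed affine model)."""
    A = np.column_stack([current, np.ones_like(current)])
    (gain, offset), *_ = np.linalg.lstsq(A, reference, rcond=None)
    return gain, offset

# Reference intensities at five model points, and a darker, lower-contrast current view.
reference = np.array([10.0, 50.0, 90.0, 130.0, 200.0])
current = (reference - 20.0) / 1.25

gain, offset = fit_gain_offset(current, reference)
compensated = gain * current + offset  # intensities to compare against the reference
print(gain, offset)
```

In a tracker, such parameters could either be fitted before each pose update or estimated jointly with the pose; which of these, if either, the original work uses is not stated here.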

In the case of binocular tracking, a stereo camera system is used to capture two images at the same time but from different positions [2]. The baseline of the camera system can be assumed small compared to the distance to the object. Therefore, the illumination difference can be neglected.

Results

Monocular tracking: First, a reference image of the object (surface patch) is captured and registered manually to the surface model. The figures depict the registered surface for a soda bottle and for a bust, i.e., a free-form surface. Also shown are three frames of a tracking sequence with the soda bottle. The tracked model points are colored yellow. The bottle is stationary here, so no correction for changing illumination is necessary. There is also a video of tracking the bust. Thanks to optimized computation and code, one minimization step takes 5 ms on a 2 GHz P4, which corresponds to a potential tracking rate of 200 Hz at one step per frame. The achieved accuracy is 1 degree in the rotation parameters and 3 mm in the translation parameters.

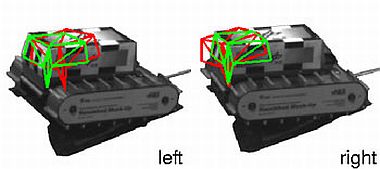

Binocular tracking: Here, no reference image of the object has to be captured. The approach was tested on the pose refinement problem for a planetary vehicle. The pose hypothesis is shown before (red) and after (green) the refinement, projected into the left and right camera images.

Publications

[1] Wolfgang Sepp and Gerd Hirzinger. "Real-Time Texture-Based 3-D Tracking." In Proceedings of the 25th DAGM Symposium on Pattern Recognition (DAGM 2003), pages 330-337, Deutsche Arbeitsgemeinschaft für Mustererkennung e.V., Magdeburg (Germany), September 10-12, 2003; Lecture Notes in Computer Science 2781, Springer 2003, ISBN 3-540-40861-4.

[2] Wolfgang Sepp and Gerd Hirzinger. "Featureless 6DoF Pose Refinement from Stereo Images." In 16th International Conference on Pattern Recognition (ICPR 2002), Volume 4, pages 17-20, International Association for Pattern Recognition (IAPR), Quebec City (Canada), August 11-15, 2002.